nuke old implementation

@@ -1,2 +0,0 @@
models/
db/

.env

@@ -1,26 +0,0 @@
# Enable debug mode in the LocalAI API
DEBUG=true

# Where models are stored
MODELS_PATH=/models

# Galleries to use
GALLERIES=[{"name":"model-gallery", "url":"github:go-skynet/model-gallery/index.yaml"}, {"url": "github:go-skynet/model-gallery/huggingface.yaml","name":"huggingface"}]

# Select model configuration in the config directory
#PRELOAD_MODELS_CONFIG=/config/wizardlm-13b.yaml
PRELOAD_MODELS_CONFIG=/config/wizardlm-13b.yaml
#PRELOAD_MODELS_CONFIG=/config/wizardlm-13b-superhot.yaml

# You don't need a valid OpenAI key; however, the Python libraries expect
# the variable to be set and fail otherwise
OPENAI_API_KEY=sk---

# Set the OpenAI API base URL to point to LocalAI
DEFAULT_API_BASE=http://api:8080

# Set an image path
IMAGE_PATH=/tmp

# Set the default number of threads
THREADS=14
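`GALLERIES` holds a JSON array of objects with `name` and `url` keys (the two entries above list them in different orders, which JSON allows). A minimal Python sketch for sanity-checking the value before starting the stack; `parse_galleries` is a hypothetical helper, not something LocalAI provides:

```python
import json

# Value copied verbatim from the GALLERIES line above.
GALLERIES = (
    '[{"name":"model-gallery", "url":"github:go-skynet/model-gallery/index.yaml"}, '
    '{"url": "github:go-skynet/model-gallery/huggingface.yaml","name":"huggingface"}]'
)


def parse_galleries(raw: str) -> dict:
    """Hypothetical helper: map gallery name -> URL, failing loudly on bad input."""
    entries = json.loads(raw)  # raises json.JSONDecodeError on malformed JSON
    galleries = {}
    for entry in entries:
        # Each entry needs both keys; their order inside the object is irrelevant.
        if "name" not in entry or "url" not in entry:
            raise ValueError(f"gallery entry missing name/url: {entry!r}")
        galleries[entry["name"]] = entry["url"]
    return galleries
```

For example, `parse_galleries(GALLERIES)["huggingface"]` returns the `huggingface.yaml` URL.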

.github/workflows/image.yaml

@@ -1,142 +0,0 @@
---
name: 'build container images'

on:
  pull_request:
  push:
    branches:
      - main

jobs:
  localagi:
    runs-on: ubuntu-latest
    steps:
      - name: Checkout
        uses: actions/checkout@v3

      - name: Prepare
        id: prep
        run: |
          DOCKER_IMAGE=quay.io/go-skynet/localagi
          VERSION=main
          SHORTREF=${GITHUB_SHA::8}

          # If this is a git tag, use the tag name as a docker tag
          if [[ $GITHUB_REF == refs/tags/* ]]; then
            VERSION=${GITHUB_REF#refs/tags/}
          fi
          TAGS="${DOCKER_IMAGE}:${VERSION},${DOCKER_IMAGE}:${SHORTREF}"

          # If the VERSION looks like a version number, assume that
          # this is the most recent version of the image and also
          # tag it 'latest'.
          if [[ $VERSION =~ ^v[0-9]{1,3}\.[0-9]{1,3}\.[0-9]{1,3}$ ]]; then
            TAGS="$TAGS,${DOCKER_IMAGE}:latest"
          fi

          # Set output parameters.
          echo "tags=${TAGS}" >> "$GITHUB_OUTPUT"
          echo "docker_image=${DOCKER_IMAGE}" >> "$GITHUB_OUTPUT"

      - name: Set up QEMU
        uses: docker/setup-qemu-action@master
        with:
          platforms: all

      - name: Set up Docker Buildx
        id: buildx
        uses: docker/setup-buildx-action@master

      - name: Login to Quay.io
        if: github.event_name != 'pull_request'
        uses: docker/login-action@v2
        with:
          registry: quay.io
          username: ${{ secrets.QUAY_USERNAME }}
          password: ${{ secrets.QUAY_PASSWORD }}
      - name: Build
        if: github.event_name != 'pull_request'
        uses: docker/build-push-action@v4
        with:
          builder: ${{ steps.buildx.outputs.name }}
          context: .
          file: ./Dockerfile
          platforms: linux/amd64
          push: true
          tags: ${{ steps.prep.outputs.tags }}
      - name: Build PRs
        if: github.event_name == 'pull_request'
        uses: docker/build-push-action@v4
        with:
          builder: ${{ steps.buildx.outputs.name }}
          context: .
          file: ./Dockerfile
          platforms: linux/amd64
          push: false
          tags: ${{ steps.prep.outputs.tags }}

  discord-localagi:
    runs-on: ubuntu-latest
    steps:
      - name: Checkout
        uses: actions/checkout@v3

      - name: Prepare
        id: prep
        run: |
          DOCKER_IMAGE=quay.io/go-skynet/localagi-discord
          VERSION=main
          SHORTREF=${GITHUB_SHA::8}

          # If this is a git tag, use the tag name as a docker tag
          if [[ $GITHUB_REF == refs/tags/* ]]; then
            VERSION=${GITHUB_REF#refs/tags/}
          fi
          TAGS="${DOCKER_IMAGE}:${VERSION},${DOCKER_IMAGE}:${SHORTREF}"

          # If the VERSION looks like a version number, assume that
          # this is the most recent version of the image and also
          # tag it 'latest'.
          if [[ $VERSION =~ ^v[0-9]{1,3}\.[0-9]{1,3}\.[0-9]{1,3}$ ]]; then
            TAGS="$TAGS,${DOCKER_IMAGE}:latest"
          fi

          # Set output parameters.
          echo "tags=${TAGS}" >> "$GITHUB_OUTPUT"
          echo "docker_image=${DOCKER_IMAGE}" >> "$GITHUB_OUTPUT"

      - name: Set up QEMU
        uses: docker/setup-qemu-action@master
        with:
          platforms: all

      - name: Set up Docker Buildx
        id: buildx
        uses: docker/setup-buildx-action@master

      - name: Login to Quay.io
        if: github.event_name != 'pull_request'
        uses: docker/login-action@v2
        with:
          registry: quay.io
          username: ${{ secrets.QUAY_USERNAME }}
          password: ${{ secrets.QUAY_PASSWORD }}
      - name: Build
        if: github.event_name != 'pull_request'
        uses: docker/build-push-action@v4
        with:
          builder: ${{ steps.buildx.outputs.name }}
          context: ./examples/discord
          file: ./examples/discord/Dockerfile
          platforms: linux/amd64
          push: true
          tags: ${{ steps.prep.outputs.tags }}
      - name: Build PRs
        if: github.event_name == 'pull_request'
        uses: docker/build-push-action@v4
        with:
          builder: ${{ steps.buildx.outputs.name }}
          context: ./examples/discord
          file: ./examples/discord/Dockerfile
          platforms: linux/amd64
          push: false
          tags: ${{ steps.prep.outputs.tags }}
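The Prepare step's tagging rules are easy to get wrong, so it can help to restate them in Python and test them locally. A sketch that mirrors the bash logic above; `compute_tags` is our own name, not part of the workflow:

```python
import re

# Same pattern as the bash `[[ $VERSION =~ ... ]]` test in the Prepare step.
SEMVER = re.compile(r"^v[0-9]{1,3}\.[0-9]{1,3}\.[0-9]{1,3}$")


def compute_tags(docker_image: str, github_ref: str, github_sha: str) -> str:
    """Return the comma-separated tag list the Prepare step would emit."""
    version = "main"
    shortref = github_sha[:8]  # bash: ${GITHUB_SHA::8}
    if github_ref.startswith("refs/tags/"):
        version = github_ref[len("refs/tags/"):]  # bash: ${GITHUB_REF#refs/tags/}
    tags = [f"{docker_image}:{version}", f"{docker_image}:{shortref}"]
    # Only plain vX.Y.Z tags also move the 'latest' tag.
    if SEMVER.match(version):
        tags.append(f"{docker_image}:latest")
    return ",".join(tags)
```

For a push of tag `v1.2.3` at commit `0123456789abcdef`, `compute_tags("quay.io/go-skynet/localagi", "refs/tags/v1.2.3", "0123456789abcdef")` yields the `v1.2.3`, `01234567` and `latest` tags; a branch push or a pre-release tag such as `v1.2.3-rc1` does not get `latest`.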

.gitignore

@@ -1,4 +0,0 @@
db/
models/
config.ini
.dockerenv

Dockerfile

@@ -1,18 +0,0 @@
FROM python:3.10-bullseye
WORKDIR /app
COPY ./requirements.txt /app/requirements.txt
RUN pip install --no-cache-dir -r requirements.txt


ENV DEBIAN_FRONTEND=noninteractive

# Install package dependencies
RUN apt-get update -y && \
    apt-get install -y --no-install-recommends \
        alsa-utils \
        libsndfile1-dev && \
    apt-get clean

COPY . /app
RUN pip install .
ENTRYPOINT [ "python", "./main.py" ]

LICENSE

@@ -1,21 +0,0 @@
MIT License

Copyright (c) 2023 Ettore Di Giacinto

Permission is hereby granted, free of charge, to any person obtaining a copy
of this software and associated documentation files (the "Software"), to deal
in the Software without restriction, including without limitation the rights
to use, copy, modify, merge, publish, distribute, sublicense, and/or sell
copies of the Software, and to permit persons to whom the Software is
furnished to do so, subject to the following conditions:

The above copyright notice and this permission notice shall be included in all
copies or substantial portions of the Software.

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR
IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,
FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE
AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER
LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,
OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE
SOFTWARE.

README.md

@@ -1,184 +0,0 @@

<h1 align="center">
<br>
<img height="300" src="https://github.com/mudler/LocalAGI/assets/2420543/b69817ce-2361-4234-a575-8f578e159f33"> <br>
LocalAGI
<br>
</h1>

[AutoGPT](https://github.com/Significant-Gravitas/Auto-GPT), [babyAGI](https://github.com/yoheinakajima/babyagi), ... and now LocalAGI!

LocalAGI is a small 🤖 virtual assistant that you can run locally, made by the [LocalAI](https://github.com/go-skynet/LocalAI) author and powered by it.

The goal is:
- Keep it simple, hackable and easy to understand
- No API keys and no cloud services needed: 100% local. Tailored for local use, but still compatible with OpenAI.
- A smart agent/virtual assistant that can do tasks
- Small set of dependencies
- Run with Docker/Podman/Containers
- Rather than trying to do everything, provide a good starting point for other projects

Note: Be warned! It was hacked together in a weekend, and it's just an experiment to see what can be done with local LLMs.

## 🚀 Features

- 🧠 LLM for intent detection
- 🧠 Uses functions for actions
- 📝 Write to long-term memory
- 📖 Read from long-term memory
- 🌐 Internet access for search
- :card_file_box: Write files
- 🔌 Plan steps to achieve a goal
- 🤖 Avatar creation with Stable Diffusion
- 🗨️ Conversational
- 🗣️ Voice synthesis with TTS

## Demo

Search the internet (interactive mode)

https://github.com/mudler/LocalAGI/assets/2420543/23199ca3-7380-4efc-9fac-a6bc2b52bdb3

Plan a road trip (batch mode)

https://github.com/mudler/LocalAGI/assets/2420543/9ba43b82-dec5-432a-bdb9-8318e7db59a4

> Note: The demo runs on a GPU with `30b` models.

## :book: Quick start

No frills: just run docker-compose and start chatting with your virtual assistant:

```bash
# Modify the configuration
# vim .env
# first run (and pulling the container)
docker-compose up
# next runs
docker-compose run -i --rm localagi
```

## How to use it

|

||||

|

||||

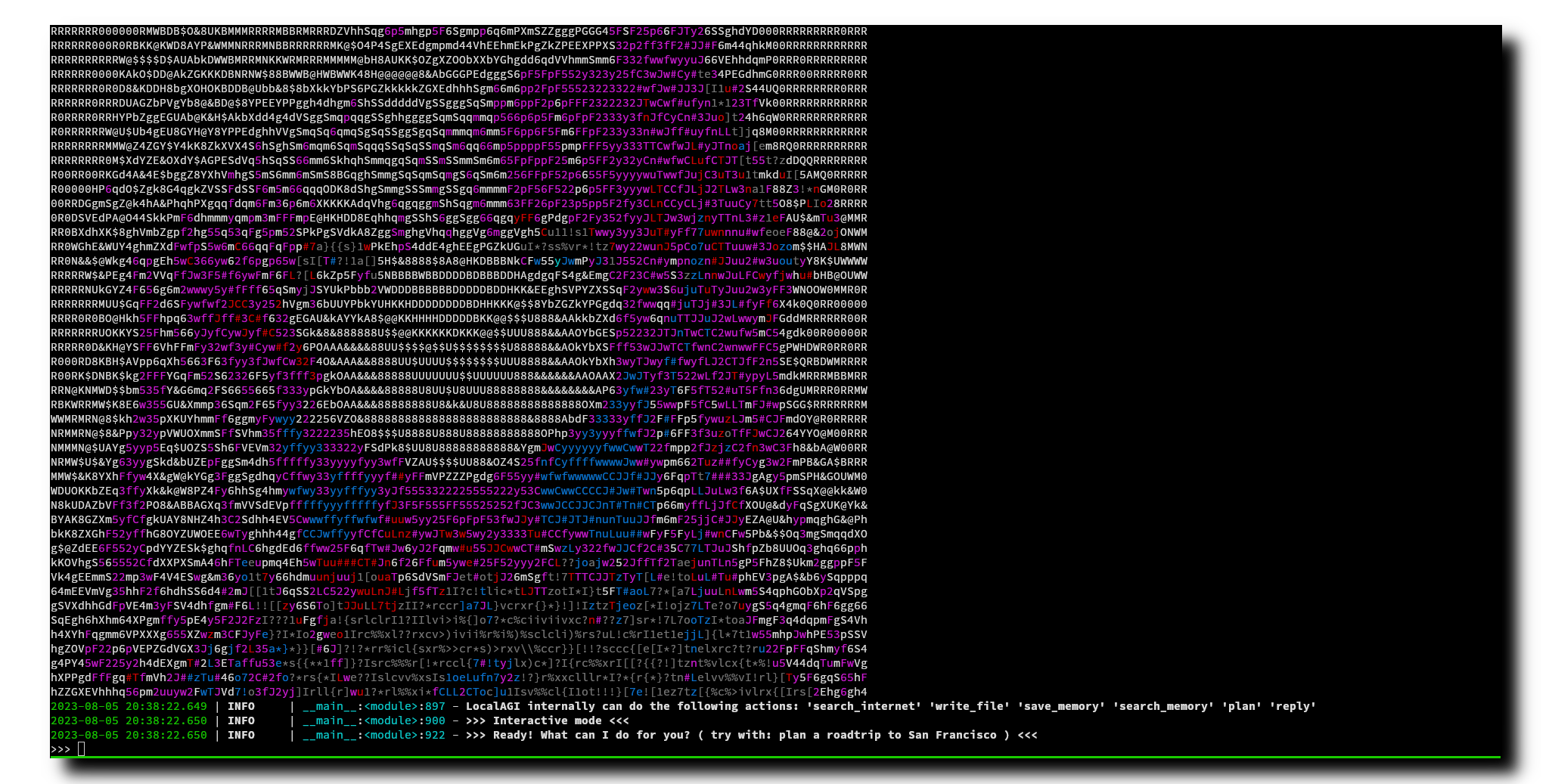

By default localagi starts in interactive mode

|

||||

|

||||

### Examples

Road trip planner, limiting internet searches to 3 results:

```bash
docker-compose run -i --rm localagi \
  --skip-avatar \
  --subtask-context \
  --postprocess \
  --search-results 3 \
  --prompt "prepare a plan for my roadtrip to san francisco"
```

Limit the plan to 3 steps:

```bash
docker-compose run -i --rm localagi \
  --skip-avatar \
  --subtask-context \
  --postprocess \
  --search-results 1 \
  --prompt "do a plan for my roadtrip to san francisco" \
  --plan-message "The assistant replies with a plan of 3 steps to answer the request with a list of subtasks with logical steps. The reasoning includes a self-contained, detailed and descriptive instruction to fulfill the task."
```

### Advanced

LocalAGI has several CLI options to tweak the experience:

- `--system-prompt` is the system prompt to use. If not specified, no system prompt is used.
- `--prompt` is the prompt to use in batch mode. If not specified, LocalAGI defaults to interactive mode.
- `--interactive` enables interactive mode. When used with `--prompt`, it drops you into an interactive session after the first prompt is evaluated.
- `--skip-avatar` skips avatar creation. Useful if you want to run it in a headless environment.
- `--re-evaluate` re-evaluates whether another action is needed or the user request has been completed.
- `--postprocess` post-processes the reasoning for analysis.
- `--subtask-context` includes context in subtasks.
- `--search-results` is the number of search results to use.
- `--plan-message` is the message to use during planning. You can override it, for example, to force the plan to follow different instructions, such as a fixed number of steps.
- `--tts-api-base` is the TTS API base. Defaults to `http://api:8080`.
- `--localai-api-base` is the LocalAI API base. Defaults to `http://api:8080`.
- `--images-api-base` is the Images API base. Defaults to `http://api:8080`.
- `--embeddings-api-base` is the Embeddings API base. Defaults to `http://api:8080`.
- `--functions-model` is the functions model to use. Defaults to `functions`.
- `--embeddings-model` is the embeddings model to use. Defaults to `all-MiniLM-L6-v2`.
- `--llm-model` is the LLM model to use. Defaults to `gpt-4`.
- `--tts-model` is the TTS model to use. Defaults to `en-us-kathleen-low.onnx`.
- `--stablediffusion-model` is the Stable Diffusion model to use. Defaults to `stablediffusion`.
- `--stablediffusion-prompt` is the Stable Diffusion prompt to use. Defaults to `DEFAULT_PROMPT`.
- `--force-action` forces a specific action.
- `--debug` enables debug mode.
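The options above map naturally onto Python's `argparse`. The sketch below is hypothetical: it is not LocalAGI's actual parser, it covers only a subset of the flags, and it uses only the defaults stated in the list (where the list states none, it uses `None`). `build_parser` and `is_batch_mode` are illustrative names.

```python
import argparse

API_BASE = "http://api:8080"  # default quoted in the option list above


def build_parser() -> argparse.ArgumentParser:
    parser = argparse.ArgumentParser(description="LocalAGI CLI (partial sketch)")
    # Without --prompt the agent runs interactively.
    parser.add_argument("--prompt", default=None)
    parser.add_argument("--system-prompt", default=None)
    parser.add_argument("--interactive", action="store_true")
    # Boolean switches.
    parser.add_argument("--skip-avatar", action="store_true")
    parser.add_argument("--re-evaluate", action="store_true")
    parser.add_argument("--postprocess", action="store_true")
    parser.add_argument("--subtask-context", action="store_true")
    parser.add_argument("--debug", action="store_true")
    # Value options; no default is documented for these two.
    parser.add_argument("--search-results", type=int, default=None)
    parser.add_argument("--plan-message", default=None)
    # Endpoints and model names with documented defaults.
    parser.add_argument("--localai-api-base", default=API_BASE)
    parser.add_argument("--functions-model", default="functions")
    parser.add_argument("--llm-model", default="gpt-4")
    parser.add_argument("--embeddings-model", default="all-MiniLM-L6-v2")
    return parser


def is_batch_mode(args: argparse.Namespace) -> bool:
    """Batch mode: a prompt was given and --interactive was not requested."""
    return args.prompt is not None and not args.interactive
```

`argparse` turns `--skip-avatar` into the attribute `skip_avatar`, so `build_parser().parse_args(["--skip-avatar", "--search-results", "3"])` gives `args.skip_avatar == True` and `args.search_results == 3`.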

### Customize

To use a different model, see the examples in the `config` folder.
To select a model, modify the `.env` file and change the `PRELOAD_MODELS_CONFIG` variable to point to a different configuration file.

### Caveats

The "goodness" of a model has a big impact on how well LocalAGI works. Currently, `13b` models are powerful enough to perform multi-step tasks or chain several actions. However, they are quite slow when running on CPU (no big surprise here).

Context size is a limitation: the `config` folder includes examples that run SuperHOT models with an 8k context size, but their quality is not good enough to perform complex tasks.

## What is LocalAGI?

It is a dead simple experiment that shows how to tie together the various LocalAI functionalities to create a virtual assistant that can do tasks. It is simple on purpose: minimalistic, and easy for everyone to understand and customize.

It differs from babyAGI and AutoGPT in that it uses [LocalAI functions](https://localai.io/features/openai-functions/): it is a from-scratch attempt, built on purpose to run locally with [LocalAI](https://localai.io) (no API keys needed!) instead of expensive cloud services. It sets itself apart from other projects by striving to be small and easy to fork.

### How it works

`LocalAGI` does only the bare minimum around LocalAI functions to create a virtual assistant that can do generic tasks. It runs an endless loop of `intent detection`, `function invocation`, `self-evaluation` and `reply generation` (if it decides to reply! :)). The agent can plan complex tasks by invoking multiple functions, and can remember things from the conversation.

In a nutshell, it goes like this:

- Based on the conversation history, it decides whether it needs to take an action by using functions. It uses the LLM to detect the intent from the conversation.
- If it needs to take an action (e.g. "remember something from the conversation") or to generate complex tasks (executing a chain of functions to achieve a goal), it invokes the functions.
- It re-evaluates whether it needs to take any other action.
- It returns the result to the LLM, which generates a reply for the user.
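The loop described above can be sketched in a few lines of Python. This is an illustration under stated assumptions, not LocalAGI's implementation: the LLM-backed steps are passed in as plain callables, the names `agent_turn` and `detect_intent` are hypothetical, and the `max_steps` cap is our addition to stop a misbehaving model from looping forever.

```python
from typing import Callable, Dict, List, Optional, Tuple

Message = Dict[str, str]
# Hypothetical intent detector: returns (function_name, json_arguments) or None.
IntentFn = Callable[[List[Message]], Optional[Tuple[str, str]]]


def agent_turn(
    history: List[Message],
    detect_intent: IntentFn,
    functions: Dict[str, Callable[[str], str]],
    reply: Callable[[List[Message]], str],
    max_steps: int = 5,
) -> str:
    """One user turn: detect intent, invoke functions, re-evaluate, then reply."""
    for _ in range(max_steps):
        # 1. Intent detection: does the conversation call for an action?
        intent = detect_intent(history)
        if intent is None:
            break
        name, arguments = intent
        # 2. Function invocation.
        result = functions[name](arguments)
        # 3. Append the result so the next detection re-evaluates with it in context.
        history.append({"role": "function", "name": name, "content": result})
    # 4. Reply generation from the enriched history.
    return reply(history)
```

In a real agent, `detect_intent` and `reply` would each wrap a call to the LLM. Keeping them injectable makes the control flow testable with stubs.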

Under the hood, LocalAI converts functions to llama.cpp BNF grammars. While OpenAI fine-tuned a model to reply with function calls, LocalAI constrains the LLM's output to follow a grammar. This is a much more efficient approach, and also a more flexible one, as you can define your own functions and grammars. To learn more, check out the [LocalAI documentation](https://localai.io/docs/llm) and my tweet explaining how it works under the hood: https://twitter.com/mudler_it/status/1675524071457533953.
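On the client side, this boils down to an ordinary OpenAI-style chat-completions request that carries a `functions` list. The sketch below uses only the standard library. It assumes LocalAI's OpenAI-compatible `/v1/chat/completions` endpoint at the `DEFAULT_API_BASE` from `.env`, and the `functions` model name used in the configs. The `search_memory` schema is hypothetical.

```python
import json
import urllib.request

API_BASE = "http://api:8080"  # DEFAULT_API_BASE from the .env file

# Hypothetical function schema in the OpenAI "functions" format (mid-2023 API).
SEARCH_MEMORY = {
    "name": "search_memory",
    "description": "Search the long-term memory for relevant information",
    "parameters": {
        "type": "object",
        "properties": {"query": {"type": "string", "description": "what to look for"}},
        "required": ["query"],
    },
}


def build_request(messages, functions, model="functions"):
    """Chat-completions body; LocalAI turns `functions` into a grammar for the model."""
    return {
        "model": model,           # the `functions` model name from the config files
        "messages": messages,
        "functions": functions,
        "function_call": "auto",  # let the model decide whether to call a function
    }


def send(body, api_base=API_BASE):
    """POST the body to the OpenAI-compatible endpoint and return the parsed JSON."""
    req = urllib.request.Request(
        f"{api_base}/v1/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)
```

Assume LocalAI mirrors OpenAI's response shape. When the model chooses a function, `choices[0]["message"]["function_call"]` then carries its `name` and its `arguments` as a JSON string. This is why the agent functions in this commit begin by calling `json.loads` on their input.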

### Agent functions

The intention of this project is to keep the agent minimal, so that others can build on top of it or fork it. The agent can perform the following functions:

- remember something from the conversation
- recall something from the conversation
- search for something on the internet
- plan a complex task by invoking multiple functions
- write files to disk
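The sketch below shows one way to register and dispatch such functions. It assumes, as the agent code in this commit does (see `save`), that each handler receives its arguments as a JSON string. The names `save_memory`, `search_memory` and `dispatch` are hypothetical, and a plain list with substring matching stands in for the Chroma/Milvus vector store.

```python
import json
import uuid
from typing import Callable, Dict, List

# Toy in-memory stand-in for the vector store (hypothetical).
MEMORIES: List[dict] = []


def remember(arguments: str) -> str:
    q = json.loads(arguments)  # arguments arrive as a JSON string
    MEMORIES.append({"id": str(uuid.uuid4()), "content": q["content"]})
    return "saved to memory"


def recall(arguments: str) -> str:
    q = json.loads(arguments)
    # Naive substring match instead of embedding similarity search.
    hits = [m["content"] for m in MEMORIES if q["query"].lower() in m["content"].lower()]
    return "\n".join(hits) or "no memories found"


FUNCTIONS: Dict[str, Callable[[str], str]] = {
    "save_memory": remember,
    "search_memory": recall,
}


def dispatch(name: str, arguments: str) -> str:
    """Route a function call chosen by the LLM to its handler."""
    if name not in FUNCTIONS:
        return f"unknown function: {name}"
    return FUNCTIONS[name](arguments)
```

Adding a capability then means writing one handler and adding one entry to `FUNCTIONS`, which fits the project's goal of staying small and easy to fork.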

## Roadmap

- [x] 100% local, with LocalAI. NO API KEYS NEEDED!
- [x] Create a simple virtual assistant
- [x] Make the virtual assistant perform functions such as storing long-term memories and autonomously searching them when needed
- [x] Create the assistant avatar with Stable Diffusion
- [x] Give it a voice
- [ ] Use Weaviate instead of Chroma
- [ ] Get voice input (push to talk or wakeword)
- [ ] Make a REST API (OpenAI compliant?) so it can be plugged into, e.g., a third-party service
- [x] Take a system prompt so it can act as a "character" (e.g. "answer in rick and morty style")

## Development

Run docker-compose with your local copy of `main.py` mounted into the container:

```bash
docker-compose run -v "$PWD/main.py:/app/main.py" -i --rm localagi
```

## Notes

- A 13b model is enough for contextualized research and for searching/retrieving memories
- A 30b model is enough to generate a road trip plan (so cool!)
- With SuperHOT models it loses its magic, but they may still be suitable for search
- Context size is your enemy. `--postprocess` sometimes helps, but not always
- It can be silly!
- It is slow on CPU: don't expect `7b` models to perform well, and while `13b` models perform better, they are quite slow on CPU.

@@ -1,45 +0,0 @@
- id: huggingface@TheBloke/WizardLM-13B-V1.1-GGML/wizardlm-13b-v1.1.ggmlv3.q5_K_M.bin
  name: "gpt-4"
  overrides:
    context_size: 2048
    mmap: true
    f16: true
    mirostat: 2
    mirostat_tau: 5.0
    mirostat_eta: 0.1
    parameters:
      temperature: 0.1
      top_k: 40
      top_p: 0.95
- id: model-gallery@stablediffusion
- id: model-gallery@voice-en-us-kathleen-low
- url: github:go-skynet/model-gallery/base.yaml
  name: all-MiniLM-L6-v2
  overrides:
    embeddings: true
    backend: huggingface-embeddings
    parameters:
      model: all-MiniLM-L6-v2
- id: huggingface@TheBloke/WizardLM-13B-V1.1-GGML/wizardlm-13b-v1.1.ggmlv3.q5_K_M.bin
  name: functions
  overrides:
    context_size: 2048
    mirostat: 2
    mirostat_tau: 5.0
    mirostat_eta: 0.1
    template:
      chat: ""
      completion: ""
    roles:
      assistant: "ASSISTANT:"
      system: "SYSTEM:"
      assistant_function_call: "FUNCTION_CALL:"
      function: "FUNCTION CALL RESULT:"
    parameters:
      temperature: 0.1
      top_k: 40
      top_p: 0.95
    function:
      disable_no_action: true
    mmap: true
    f16: true

@@ -1,47 +0,0 @@
- id: huggingface@TheBloke/WizardLM-13B-V1-0-Uncensored-SuperHOT-8K-GGML/wizardlm-13b-v1.0-superhot-8k.ggmlv3.q4_K_M.bin
  name: "gpt-4"
  overrides:
    context_size: 8192
    mmap: true
    f16: true
    mirostat: 2
    mirostat_tau: 5.0
    mirostat_eta: 0.1
    parameters:
      temperature: 0.1
      top_k: 40
      top_p: 0.95
      rope_freq_scale: 0.25
- id: model-gallery@stablediffusion
- id: model-gallery@voice-en-us-kathleen-low
- url: github:go-skynet/model-gallery/base.yaml
  name: all-MiniLM-L6-v2
  overrides:
    embeddings: true
    backend: huggingface-embeddings
    parameters:
      model: all-MiniLM-L6-v2
- id: huggingface@TheBloke/WizardLM-13B-V1-0-Uncensored-SuperHOT-8K-GGML/wizardlm-13b-v1.0-superhot-8k.ggmlv3.q4_K_M.bin
  name: functions
  overrides:
    context_size: 8192
    mirostat: 2
    mirostat_tau: 5.0
    mirostat_eta: 0.1
    template:
      chat: ""
      completion: ""
    roles:
      assistant: "ASSISTANT:"
      system: "SYSTEM:"
      assistant_function_call: "FUNCTION_CALL:"
      function: "FUNCTION CALL RESULT:"
    parameters:
      temperature: 0.1
      top_k: 40
      top_p: 0.95
      rope_freq_scale: 0.25
    function:
      disable_no_action: true
    mmap: true
    f16: true
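Where `rope_freq_scale: 0.25` comes from: SuperHOT 8K models stretch LLaMA's original 2048-token context to 8192 tokens with linear position interpolation. Every token position is multiplied by 2048 / 8192 = 0.25 before the rotary embedding is applied, so positions up to 8191 land inside the range the base model was trained on. The 30b SuperHOT configuration below uses the same value. A small Python illustration (the helper names are ours):

```python
def linear_rope_scale(trained_ctx: int, target_ctx: int) -> float:
    """Linear position interpolation factor: squeeze target positions into the trained range."""
    return trained_ctx / target_ctx


def scaled_position(pos: int, scale: float) -> float:
    # With linear scaling, RoPE sees position pos * scale instead of pos.
    return pos * scale


# LLaMA-1 trained context vs. the context_size: 8192 used above.
scale = linear_rope_scale(2048, 8192)
```

Under the same rule, a model stretched only to 4096 tokens would need a scale of 0.5.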

@@ -1,45 +0,0 @@
- id: huggingface@thebloke/wizardlm-13b-v1.0-uncensored-ggml/wizardlm-13b-v1.0-uncensored.ggmlv3.q4_k_m.bin
  name: "gpt-4"
  overrides:
    context_size: 2048
    mmap: true
    f16: true
    mirostat: 2
    mirostat_tau: 5.0
    mirostat_eta: 0.1
    parameters:
      temperature: 0.1
      top_k: 40
      top_p: 0.95
- id: model-gallery@stablediffusion
- id: model-gallery@voice-en-us-kathleen-low
- url: github:go-skynet/model-gallery/base.yaml
  name: all-MiniLM-L6-v2
  overrides:
    embeddings: true
    backend: huggingface-embeddings
    parameters:
      model: all-MiniLM-L6-v2
- id: huggingface@thebloke/wizardlm-13b-v1.0-uncensored-ggml/wizardlm-13b-v1.0-uncensored.ggmlv3.q4_0.bin
  name: functions
  overrides:
    context_size: 2048
    mirostat: 2
    mirostat_tau: 5.0
    mirostat_eta: 0.1
    template:
      chat: ""
      completion: ""
    roles:
      assistant: "ASSISTANT:"
      system: "SYSTEM:"
      assistant_function_call: "FUNCTION_CALL:"
      function: "FUNCTION CALL RESULT:"
    parameters:
      temperature: 0.1
      top_k: 40
      top_p: 0.95
    function:
      disable_no_action: true
    mmap: true
    f16: true

@@ -1,47 +0,0 @@
- id: huggingface@TheBloke/WizardLM-Uncensored-SuperCOT-StoryTelling-30B-SuperHOT-8K-GGML/WizardLM-Uncensored-SuperCOT-StoryTelling-30b-superhot-8k.ggmlv3.q4_0.bin
  name: "gpt-4"
  overrides:
    context_size: 8192
    mmap: true
    f16: true
    mirostat: 2
    mirostat_tau: 5.0
    mirostat_eta: 0.1
    parameters:
      temperature: 0.1
      top_k: 40
      top_p: 0.95
      rope_freq_scale: 0.25
- id: model-gallery@stablediffusion
- id: model-gallery@voice-en-us-kathleen-low
- url: github:go-skynet/model-gallery/base.yaml
  name: all-MiniLM-L6-v2
  overrides:
    embeddings: true
    backend: huggingface-embeddings
    parameters:
      model: all-MiniLM-L6-v2
- id: huggingface@TheBloke/WizardLM-Uncensored-SuperCOT-StoryTelling-30B-SuperHOT-8K-GGML/WizardLM-Uncensored-SuperCOT-StoryTelling-30b-superhot-8k.ggmlv3.q4_0.bin
  name: functions
  overrides:
    context_size: 8192
    mirostat: 2
    mirostat_tau: 5.0
    mirostat_eta: 0.1
    template:
      chat: ""
      completion: ""
    roles:
      assistant: "ASSISTANT:"
      system: "SYSTEM:"
      assistant_function_call: "FUNCTION_CALL:"
      function: "FUNCTION CALL RESULT:"
    parameters:
      temperature: 0.1
      top_k: 40
      top_p: 0.95
      rope_freq_scale: 0.25
    function:
      disable_no_action: true
    mmap: true
    f16: true

@@ -1,46 +0,0 @@
- id: huggingface@thebloke/wizardlm-30b-uncensored-ggml/wizardlm-30b-uncensored.ggmlv3.q2_k.bin
  galleryModel:
  name: "gpt-4"
  overrides:
    context_size: 4096
    mmap: true
    f16: true
    mirostat: 2
    mirostat_tau: 5.0
    mirostat_eta: 0.1
    parameters:
      temperature: 0.1
      top_k: 40
      top_p: 0.95
- id: model-gallery@stablediffusion
- id: model-gallery@voice-en-us-kathleen-low
- url: github:go-skynet/model-gallery/base.yaml
  name: all-MiniLM-L6-v2
  overrides:
    embeddings: true
    backend: huggingface-embeddings
    parameters:
      model: all-MiniLM-L6-v2
- id: huggingface@thebloke/wizardlm-30b-uncensored-ggml/wizardlm-30b-uncensored.ggmlv3.q2_k.bin
  name: functions
  overrides:
    context_size: 4096
    mirostat: 2
    mirostat_tau: 5.0
    mirostat_eta: 0.1
    template:
      chat: ""
      completion: ""
    roles:
      assistant: "ASSISTANT:"
      system: "SYSTEM:"
      assistant_function_call: "FUNCTION_CALL:"
      function: "FUNCTION CALL RESULT:"
    parameters:
      temperature: 0.1
      top_k: 40
      top_p: 0.95
    function:
      disable_no_action: true
    mmap: true
    f16: true

@@ -1,45 +0,0 @@
- id: huggingface@thebloke/wizardlm-7b-v1.0-uncensored-ggml/wizardlm-7b-v1.0-uncensored.ggmlv3.q4_k_m.bin
  name: "gpt-4"
  overrides:
    context_size: 2048
    mmap: true
    f16: true
    mirostat: 2
    mirostat_tau: 5.0
    mirostat_eta: 0.1
    parameters:
      temperature: 0.1
      top_k: 40
      top_p: 0.95
- id: model-gallery@stablediffusion
- id: model-gallery@voice-en-us-kathleen-low
- url: github:go-skynet/model-gallery/base.yaml
  name: all-MiniLM-L6-v2
  overrides:
    embeddings: true
    backend: huggingface-embeddings
    parameters:
      model: all-MiniLM-L6-v2
- id: huggingface@thebloke/wizardlm-7b-v1.0-uncensored-ggml/wizardlm-7b-v1.0-uncensored.ggmlv3.q4_0.bin
  name: functions
  overrides:
    context_size: 2048
    mirostat: 2
    mirostat_tau: 5.0
    mirostat_eta: 0.1
    template:
      chat: ""
      completion: ""
    roles:
      assistant: "ASSISTANT:"
      system: "SYSTEM:"
      assistant_function_call: "FUNCTION_CALL:"
      function: "FUNCTION CALL RESULT:"
    parameters:
      temperature: 0.1
      top_k: 40
      top_p: 0.95
    function:
      disable_no_action: true
    mmap: true
    f16: true

@@ -1,31 +0,0 @@
version: "3.9"
services:
  api:
    image: quay.io/go-skynet/local-ai:master
    healthcheck:
      test: ["CMD", "curl", "-f", "http://localhost:8080/readyz"]
      interval: 1m
      timeout: 120m
      retries: 120
    ports:
      - 8090:8080
    env_file:
      - .env
    volumes:
      - ./models:/models:cached
      - ./config:/config:cached
    command: ["/usr/bin/local-ai" ]
  localagi:
    build:
      context: .
      dockerfile: Dockerfile
    devices:
      - /dev/snd
    depends_on:
      api:
        condition: service_healthy
    volumes:
      - ./db:/app/db
      - ./data:/data
    env_file:
      - .env
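The `api` service's healthcheck runs `curl -f` against `/readyz` every minute, up to 120 times, and `localagi` only starts once the check passes (`condition: service_healthy`). Below is a rough Python equivalent for scripts that talk to LocalAI from the host. `http_ok` and `wait_until_ready` are hypothetical helpers. The 10-second per-request timeout is an assumption and differs from the compose `timeout: 120m`.

```python
import time
import urllib.error
import urllib.request
from typing import Callable


def http_ok(url: str) -> bool:
    """True when the URL answers with a 2xx status, similar to `curl -f`."""
    try:
        with urllib.request.urlopen(url, timeout=10) as resp:
            return 200 <= resp.status < 300
    except (urllib.error.URLError, OSError, ValueError):
        # HTTPError (4xx/5xx) is a URLError subclass, so it also counts as "not ready".
        return False


def wait_until_ready(url: str, retries: int = 120, interval: float = 60.0,
                     check: Callable[[str], bool] = http_ok,
                     sleep: Callable[[float], None] = time.sleep) -> bool:
    """Poll `url` up to `retries` times, `interval` seconds apart, like the healthcheck."""
    for attempt in range(retries):
        if check(url):
            return True
        if attempt < retries - 1:
            sleep(interval)
    return False
```

Compose publishes the container's port 8080 as 8090 on the host. From the host, you would therefore poll something like `wait_until_ready("http://localhost:8090/readyz", retries=3, interval=5)`.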

@@ -1,8 +0,0 @@
FROM python:3.10-bullseye
WORKDIR /app
COPY ./requirements.txt /app/requirements.txt
RUN pip install --no-cache-dir -r requirements.txt

COPY . /app

ENTRYPOINT [ "python", "./main.py" ]

@@ -1,371 +0,0 @@
import openai
#from langchain.embeddings import HuggingFaceEmbeddings
from langchain.embeddings import LocalAIEmbeddings

from langchain.document_loaders import (
    SitemapLoader,
    # GitHubIssuesLoader,
    # GitLoader,
)

import uuid
import sys
from config import config

from queue import Queue
import asyncio
import threading
from localagi import LocalAGI
from loguru import logger
from ascii_magic import AsciiArt
from duckduckgo_search import DDGS
from typing import Dict, List
import os
from langchain.text_splitter import RecursiveCharacterTextSplitter
import discord
import openai
import urllib.request
from datetime import datetime
import json
import os
from io import StringIO
FILE_NAME_FORMAT = '%Y_%m_%d_%H_%M_%S'

EMBEDDINGS_MODEL = config["agent"]["embeddings_model"]
EMBEDDINGS_API_BASE = config["agent"]["embeddings_api_base"]
PERSISTENT_DIR = config["agent"]["persistent_dir"]
MILVUS_HOST = config["agent"]["milvus_host"] if "milvus_host" in config["agent"] else ""
MILVUS_PORT = config["agent"]["milvus_port"] if "milvus_port" in config["agent"] else 0
MEMORY_COLLECTION = config["agent"]["memory_collection"]
DB_DIR = config["agent"]["db_dir"]
MEMORY_CHUNK_SIZE = int(config["agent"]["memory_chunk_size"])
MEMORY_CHUNK_OVERLAP = int(config["agent"]["memory_chunk_overlap"])
MEMORY_RESULTS = int(config["agent"]["memory_results"])
MEMORY_SEARCH_TYPE = config["agent"]["memory_search_type"]

if not os.environ.get("PYSQL_HACK", "false") == "false":
    # these three lines swap the stdlib sqlite3 lib with the pysqlite3 package for chroma
    __import__('pysqlite3')
    import sys
    sys.modules['sqlite3'] = sys.modules.pop('pysqlite3')
if MILVUS_HOST == "":
    from langchain.vectorstores import Chroma
else:
    from langchain.vectorstores import Milvus

embeddings = LocalAIEmbeddings(model=EMBEDDINGS_MODEL,openai_api_base=EMBEDDINGS_API_BASE)

loop = None

|

||||

channel = None

|

||||

def call(thing):

|

||||

return asyncio.run_coroutine_threadsafe(thing,loop).result()

def ingest(a, agent_actions={}, localagi=None):
    q = json.loads(a)
    chunk_size = MEMORY_CHUNK_SIZE
    chunk_overlap = MEMORY_CHUNK_OVERLAP
    logger.info(">>> ingesting: ")
    logger.info(q)
    documents = []
    sitemap_loader = SitemapLoader(web_path=q["url"])
    text_splitter = RecursiveCharacterTextSplitter(chunk_size=chunk_size, chunk_overlap=chunk_overlap)
    documents.extend(sitemap_loader.load())
    texts = text_splitter.split_documents(documents)
    if MILVUS_HOST == "":
        db = Chroma.from_documents(texts,embeddings,collection_name=MEMORY_COLLECTION, persist_directory=DB_DIR)
        db.persist()
        db = None
    else:
        Milvus.from_documents(texts,embeddings,collection_name=MEMORY_COLLECTION, connection_args={"host": MILVUS_HOST, "port": MILVUS_PORT})
    return f"Documents ingested"
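`ingest()` splits the sitemap pages with LangChain's `RecursiveCharacterTextSplitter`, using `memory_chunk_size` (600) and `memory_chunk_overlap` (110) from config.ini. To show what those two numbers mean, here is a simplified fixed-width character splitter. It is not LangChain's algorithm, which first tries to break on separators such as blank lines and newlines; it only illustrates the size/overlap relationship, where each window starts `chunk_size - chunk_overlap` characters after the previous one. `chunk_text` is a hypothetical helper.

```python
def chunk_text(text: str, chunk_size: int = 600, chunk_overlap: int = 110) -> list[str]:
    """Split text into fixed-width windows; each window repeats the last
    `chunk_overlap` characters of the previous one."""
    if chunk_overlap >= chunk_size:
        raise ValueError("chunk_overlap must be smaller than chunk_size")
    step = chunk_size - chunk_overlap  # 490 with the config.ini values
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # this window already reaches the end of the text
    return chunks


sample = "abcdefghij" * 150  # 1500 characters
parts = chunk_text(sample)
print([len(p) for p in parts])  # [600, 600, 520]
```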

def create_image(a, agent_actions={}, localagi=None):
    q = json.loads(a)
    logger.info(">>> creating image: ")
    logger.info(q["description"])
    size=f"{q['width']}x{q['height']}"
    response = openai.Image.create(prompt=q["description"], n=1, size=size)
    image_url = response["data"][0]["url"]
    image_name = download_image(image_url)
    image_path = f"{PERSISTENT_DIR}{image_name}"

    file = discord.File(image_path, filename=image_name)
    embed = discord.Embed(title="Generated image")
    embed.set_image(url=f"attachment://{image_name}")

    call(channel.send(file=file, content=f"Here is what I have generated", embed=embed))

    return f"Image created: {response['data'][0]['url']}"

def download_image(url: str):
    file_name = f"{datetime.now().strftime(FILE_NAME_FORMAT)}.jpg"
    full_path = f"{PERSISTENT_DIR}{file_name}"
    urllib.request.urlretrieve(url, full_path)
    return file_name

### Agent capabilities
### These functions are called by the agent to perform actions
###
def save(memory, agent_actions={}, localagi=None):
    q = json.loads(memory)
    logger.info(">>> saving to memories: ")
    logger.info(q["content"])
    if MILVUS_HOST == "":
        chroma_client = Chroma(collection_name=MEMORY_COLLECTION,embedding_function=embeddings, persist_directory=DB_DIR)
    else:
        chroma_client = Milvus(collection_name=MEMORY_COLLECTION,embedding_function=embeddings, connection_args={"host": MILVUS_HOST, "port": MILVUS_PORT})
    chroma_client.add_texts([q["content"]],[{"id": str(uuid.uuid4())}])
    if MILVUS_HOST == "":
        chroma_client.persist()
    chroma_client = None
    return f"The object was saved permanently to memory."

def search_memory(query, agent_actions={}, localagi=None):
    q = json.loads(query)
    if MILVUS_HOST == "":
        chroma_client = Chroma(collection_name=MEMORY_COLLECTION,embedding_function=embeddings, persist_directory=DB_DIR)
    else:
        chroma_client = Milvus(collection_name=MEMORY_COLLECTION,embedding_function=embeddings, connection_args={"host": MILVUS_HOST, "port": MILVUS_PORT})
    #docs = chroma_client.search(q["keywords"], "mmr")
    retriever = chroma_client.as_retriever(search_type=MEMORY_SEARCH_TYPE, search_kwargs={"k": MEMORY_RESULTS})

    docs = retriever.get_relevant_documents(q["keywords"])
    text_res="Memories found in the database:\n"

    sources = set() # To store unique sources

    # Collect unique sources
    for document in docs:
        if "source" in document.metadata:
            sources.add(document.metadata["source"])

    for doc in docs:
        # drop newlines from page_content
        content = doc.page_content.replace("\n", " ")
        content = " ".join(content.split())
        text_res+="- "+content+"\n"

    # Print the relevant sources used for the answer
    for source in sources:
        if source.startswith("http"):
            text_res += "" + source + "\n"

    chroma_client = None
    #if args.postprocess:
    #    return post_process(text_res)
    return text_res
    #return localagi.post_process(text_res)

# write file to disk with content
def save_file(arg, agent_actions={}, localagi=None):
    arg = json.loads(arg)
    filename = arg["filename"]
    content = arg["content"]
    # create persistent dir if it does not exist
    if not os.path.exists(PERSISTENT_DIR):
        os.makedirs(PERSISTENT_DIR)
    # write the file in the directory specified
    file = os.path.join(PERSISTENT_DIR, filename)

    # Check if the file already exists
    if os.path.exists(file):
        mode = 'a' # Append mode
    else:
        mode = 'w' # Write mode

    with open(file, mode) as f:
        f.write(content)

    # upload the saved file; keep `file` as the path so the return message names it
    discord_file = discord.File(file, filename=filename)
    call(channel.send(file=discord_file, content=f"Here is what I have generated"))
    return f"File {file} saved successfully."

def ddg(query: str, num_results: int, backend: str = "api") -> List[Dict[str, str]]:
    """Run query through DuckDuckGo and return metadata.

    Args:
        query: The query to search for.
        num_results: The number of results to return.

    Returns:
        A list of dictionaries with the following keys:
            snippet - The description of the result.
            title - The title of the result.
            link - The link to the result.
    """
    ddgs = DDGS()
    try:
        results = ddgs.text(
            query,
            backend=backend,
        )
        if results is None:
            return [{"Result": "No good DuckDuckGo Search Result was found"}]

        def to_metadata(result: Dict) -> Dict[str, str]:
            if backend == "news":
                return {
                    "date": result["date"],
                    "title": result["title"],
                    "snippet": result["body"],
                    "source": result["source"],
                    "link": result["url"],
                }
            return {
                "snippet": result["body"],
                "title": result["title"],
                "link": result["href"],
            }

        formatted_results = []
        for i, res in enumerate(results, 1):
            if res is not None:
                formatted_results.append(to_metadata(res))
            if len(formatted_results) == num_results:
                break
    except Exception as e:
        logger.error(e)
        return []
    return formatted_results

## Search on duckduckgo
def search_duckduckgo(a, agent_actions={}, localagi=None):
    a = json.loads(a)
    list=ddg(a["query"], 2)

    text_res=""
    for doc in list:
        text_res+=f"""{doc["link"]}: {doc["title"]} {doc["snippet"]}\n"""

    #if args.postprocess:
    #    return post_process(text_res)
    return text_res
    #l = json.dumps(list)
    #return l

### End Agent capabilities
###

### Agent action definitions
agent_actions = {
    "generate_picture": {
        "function": create_image,
        "plannable": True,
        "description": 'For creating a picture, the assistant replies with "generate_picture" and a detailed description, enhancing it with as much detail as possible.',
        "signature": {
            "name": "generate_picture",
            "parameters": {
                "type": "object",
                "properties": {
                    "description": {
                        "type": "string",
                    },
                    "width": {
                        "type": "number",
                    },
                    "height": {
                        "type": "number",
                    },
                },
            }
        },
    },
    "search_internet": {
        "function": search_duckduckgo,
        "plannable": True,
        "description": 'For searching the internet with a query, the assistant replies with the action "search_internet" and the query to search.',
        "signature": {
            "name": "search_internet",
            "description": """For searching the internet.""",
            "parameters": {
                "type": "object",
                "properties": {
                    "query": {
                        "type": "string",
                        "description": "the query to search for"
                    },
                },
            }
        },
    },
    "save_file": {
        "function": save_file,
        "plannable": True,
        "description": 'The assistant replies with the action "save_file", the filename and content to save for writing a file to disk permanently. This can be used to store the result of complex actions locally.',
        "signature": {
            "name": "save_file",
            "description": """For saving a file to disk with content.""",
            "parameters": {
                "type": "object",
                "properties": {
                    "filename": {
                        "type": "string",
                        "description": "name of the file to write"
                    },
                    "content": {
                        "type": "string",
                        "description": "content to write to the file"
                    },
                },
            }
        },
    },
    "ingest": {
        "function": ingest,
        "plannable": True,
        "description": 'The assistant replies with the action "ingest" when there is a URL to a sitemap to ingest memories from.',
        "signature": {
            "name": "ingest",
            "description": """Ingest the pages of a sitemap into memory.""",
            "parameters": {
                "type": "object",
                "properties": {
                    "url": {
                        "type": "string",
                        "description": "URL of the sitemap to ingest"
                    },
                },
                "required": ["url"]
            }
        },
    },
    "save_memory": {
        "function": save,
        "plannable": True,
        "description": 'The assistant replies with the action "save_memory" and the string to remember, to permanently store information it considers relevant.',
        "signature": {
            "name": "save_memory",
            "description": """Save or store information into memory.""",
            "parameters": {
                "type": "object",
                "properties": {
                    "content": {
                        "type": "string",
                        "description": "information to save"
                    },
                },
                "required": ["content"]
            }
        },
    },
    "search_memory": {
        "function": search_memory,
        "plannable": True,
        "description": 'The assistant replies with the action "search_memory" to search its memories with a query term.',
        "signature": {
            "name": "search_memory",
            "description": """Search in memory""",
            "parameters": {
                "type": "object",
                "properties": {
                    "keywords": {
                        "type": "string",
                        "description": "keywords to search for in memory"
                    },
                },
                "required": ["keywords"]
            }
        },
    },
}
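Each `agent_actions` entry pairs a Python callable with a `signature` that looks like an OpenAI function-calling schema (`name`, `description`, and JSON-Schema `parameters`). How `LocalAGI` consumes this dictionary is not shown in this diff, so the sketch below is only an assumption about one way such a registry can be used: collect the signatures to offer to the model, then route a returned function name and its JSON-encoded arguments to the matching callable. The `echo` action and the `functions_schema`/`dispatch` helpers are hypothetical.

```python
import json
from typing import Any, Callable, Dict, List


def echo(arg: str, agent_actions=None, localagi=None) -> str:
    # Hypothetical action with the same shape as the ones above:
    # it receives the model's arguments as a JSON string.
    return "echo: " + json.loads(arg)["text"]


registry: Dict[str, Dict[str, Any]] = {
    "echo": {
        "function": echo,
        "plannable": True,
        "description": "Echo the given text.",
        "signature": {
            "name": "echo",
            "parameters": {
                "type": "object",
                "properties": {"text": {"type": "string"}},
                "required": ["text"],
            },
        },
    },
}


def functions_schema(actions: Dict[str, Dict[str, Any]]) -> List[Dict[str, Any]]:
    # The list of schemas that would be offered to the model.
    return [action["signature"] for action in actions.values()]


def dispatch(actions: Dict[str, Dict[str, Any]], name: str, arguments: str) -> str:
    # Look up the chosen action and pass the raw JSON arguments through.
    if name not in actions:
        raise KeyError(f"unknown action: {name}")
    fn: Callable[..., str] = actions[name]["function"]
    return fn(arguments, agent_actions=actions)


print(dispatch(registry, "echo", json.dumps({"text": "hi"})))  # echo: hi
```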

@@ -1,31 +0,0 @@
[discord]
server_id =
api_key =

[openai]
api_key = sl-d-d-d

[settings]
default_size = 1024x1024
file_path = images/
file_name_format = %Y_%m_%d_%H_%M_%S

[agent]
llm_model = gpt-4
tts_model = en-us-kathleen-low.onnx
tts_api_base = http://api:8080
functions_model = functions
api_base = http://api:8080
stablediffusion_api_base = http://api:8080
stablediffusion_model = stablediffusion
embeddings_model = all-MiniLM-L6-v2
embeddings_api_base = http://api:30316/v1
persistent_dir = /tmp/data
db_dir = /tmp/data/db
milvus_host =
milvus_port =
memory_collection = localai
memory_chunk_size = 600
memory_chunk_overlap = 110
memory_results = 3
memory_search_type = mmr

@@ -1,5 +0,0 @@
from configparser import ConfigParser

config_file = "config.ini"
config = ConfigParser(interpolation=None)
config.read(config_file)
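config.py builds its parser with `interpolation=None`. That matters for config.ini: `file_name_format = %Y_%m_%d_%H_%M_%S` is a `strftime` pattern, and with the default `BasicInterpolation` a bare `%Y` is rejected when the value is read. The sketch below uses an excerpt of config.ini (the `INI` string is illustrative) to show both behaviours, and how keys left empty, such as `milvus_host =`, come back as empty strings, so an `int()` conversion needs its own fallback.

```python
from configparser import ConfigParser, InterpolationSyntaxError

INI = """
[settings]
file_name_format = %Y_%m_%d_%H_%M_%S

[agent]
milvus_host =
milvus_port =
memory_chunk_size = 600
"""

raw = ConfigParser(interpolation=None)
raw.read_string(INI)
fmt = raw["settings"]["file_name_format"]        # returned verbatim
host = raw["agent"]["milvus_host"]                # key present, value ""
port = int(raw["agent"]["milvus_port"] or 0)      # "" falls back to 0
chunk = raw.getint("agent", "memory_chunk_size")  # parsed as 600

# With the default BasicInterpolation, "%Y" is not a valid reference,
# so reading the same value raises InterpolationSyntaxError.
default = ConfigParser()
default.read_string(INI)
try:
    default["settings"]["file_name_format"]
    failed = False
except InterpolationSyntaxError:
    failed = True

print(fmt, repr(host), port, chunk, failed)
```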

@@ -1,6 +0,0 @@
#!/bin/bash

pip uninstall hnswlib chromadb-hnswlib -y
pip install hnswlib chromadb-hnswlib
cd /app
python3 /app/main.py

@@ -1,292 +0,0 @@
"""
This is a discord bot for generating images using OpenAI's DALL-E

Author: Stefan Rial
YouTube: https://youtube.com/@StefanRial
GitHub: https://github.com/StefanRial/ClaudeBot
E-Mail: mail.stefanrial@gmail.com
"""

from config import config
import os

OPENAI_API_KEY = config["openai"][str("api_key")]

if OPENAI_API_KEY == "":
    OPENAI_API_KEY = "foo"
if "OPENAI_API_BASE" not in os.environ:
    os.environ["OPENAI_API_BASE"] = config["agent"]["api_base"]
os.environ["OPENAI_API_KEY"] = OPENAI_API_KEY
import openai

import discord

import urllib.request
from datetime import datetime
from queue import Queue
import agent
from agent import agent_actions
from localagi import LocalAGI
import asyncio
import threading
from discord import app_commands
import functools
import typing

SERVER_ID = config["discord"]["server_id"]
DISCORD_API_KEY = config["discord"][str("api_key")]
# config.ini defines no "organization" key; fall back to None instead of raising KeyError
OPENAI_ORG = config["openai"].get("organization")

FILE_PATH = config["settings"][str("file_path")]
FILE_NAME_FORMAT = config["settings"][str("file_name_format")]
CRITIC = config["settings"]["critic"] if "critic" in config["settings"] else False
SIZE_LARGE = "1024x1024"
SIZE_MEDIUM = "512x512"
SIZE_SMALL = "256x256"
SIZE_DEFAULT = config["settings"][str("default_size")]

GUILD = discord.Object(id=SERVER_ID)

if not os.path.isdir(FILE_PATH):
    os.mkdir(FILE_PATH)


class Client(discord.Client):
    def __init__(self, *, intents: discord.Intents):
        super().__init__(intents=intents)
        self.tree = app_commands.CommandTree(self)

    async def setup_hook(self):
        self.tree.copy_global_to(guild=GUILD)
        await self.tree.sync(guild=GUILD)


claude_intents = discord.Intents.default()
claude_intents.messages = True
claude_intents.message_content = True
client = Client(intents=claude_intents)

openai.organization = OPENAI_ORG
openai.api_key = OPENAI_API_KEY
openai.Model.list()

async def close_thread(thread: discord.Thread):
    await thread.edit(name="closed")
    await thread.send(
        embed=discord.Embed(
            description="**Thread closed** - Context limit reached, closing...",
            color=discord.Color.blue(),
        )
    )
    await thread.edit(archived=True, locked=True)

@client.event
async def on_ready():
    print(f"We have logged in as {client.user}")

def diff(history, processed):
    return [item for item in processed if item not in history]

def analyze_history(history, processed, callback, channel):
    diff_list = diff(history, processed)
    for item in diff_list:
        if item["role"] == "function":
            content = item["content"]
            # Function result
            callback(channel.send(f"⚙️ Processed: {content}"))
        if item["role"] == "assistant" and "function_call" in item:
            function_name = item["function_call"]["name"]
            function_parameters = item["function_call"]["arguments"]
            # Function call
            callback(channel.send(f"⚙️ Called: {function_name} with {function_parameters}"))

def run_localagi_thread_history(history, message, thread, loop):
    agent.channel = message.channel
    def call(thing):
        return asyncio.run_coroutine_threadsafe(thing,loop).result()
    sent_message = call(thread.send(f"⚙️ LocalAGI starts"))

    user = message.author
    def action_callback(name, parameters):
        call(sent_message.edit(content=f"⚙️ Calling function '{name}' with {parameters}"))
    def reasoning_callback(name, reasoning):
        call(sent_message.edit(content=f"🤔 I'm thinking... '{reasoning}' (calling '{name}'), please wait.."))

    localagi = LocalAGI(
        agent_actions=agent_actions,
        llm_model=config["agent"]["llm_model"],
        tts_model=config["agent"]["tts_model"],
        action_callback=action_callback,
        reasoning_callback=reasoning_callback,
        tts_api_base=config["agent"]["tts_api_base"],
        functions_model=config["agent"]["functions_model"],
        api_base=config["agent"]["api_base"],
        stablediffusion_api_base=config["agent"]["stablediffusion_api_base"],
        stablediffusion_model=config["agent"]["stablediffusion_model"],
    )
    # remove bot ID from the message content
    message.content = message.content.replace(f"<@{client.user.id}>", "")
    conversation_history = localagi.evaluate(
        message.content,
        history,
        subtaskContext=True,
        critic=CRITIC,
    )

    analyze_history(history, conversation_history, call, thread)
    call(sent_message.edit(content=f"<@{user.id}> {conversation_history[-1]['content']}"))

def run_localagi_message(message, loop):
    agent.channel = message.channel
    def call(thing):
        return asyncio.run_coroutine_threadsafe(thing,loop).result()
    sent_message = call(message.channel.send(f"⚙️ LocalAGI starts"))

    user = message.author
    def action_callback(name, parameters):
        call(sent_message.edit(content=f"⚙️ Calling function '{name}' with {parameters}"))
    def reasoning_callback(name, reasoning):
        call(sent_message.edit(content=f"🤔 I'm thinking... '{reasoning}' (calling '{name}'), please wait.."))

    localagi = LocalAGI(
        agent_actions=agent_actions,
        llm_model=config["agent"]["llm_model"],
        tts_model=config["agent"]["tts_model"],
        action_callback=action_callback,
        reasoning_callback=reasoning_callback,
        tts_api_base=config["agent"]["tts_api_base"],
        functions_model=config["agent"]["functions_model"],
        api_base=config["agent"]["api_base"],
        stablediffusion_api_base=config["agent"]["stablediffusion_api_base"],
        stablediffusion_model=config["agent"]["stablediffusion_model"],
    )
    # remove bot ID from the message content
    message.content = message.content.replace(f"<@{client.user.id}>", "")

    conversation_history = localagi.evaluate(
        message.content,
        [],
        critic=CRITIC,
        subtaskContext=True,
    )
    analyze_history([], conversation_history, call, message.channel)
    call(sent_message.edit(content=f"<@{user.id}> {conversation_history[-1]['content']}"))

def run_localagi(interaction, prompt, loop):
    agent.channel = interaction.channel

    def call(thing):
        return asyncio.run_coroutine_threadsafe(thing,loop).result()

    user = interaction.user
    embed = discord.Embed(
        description=f"<@{user.id}> wants to chat! 🤖💬",
        color=discord.Color.green(),
    )
    embed.add_field(name=user.name, value=prompt)

    call(interaction.response.send_message(embed=embed))
    response = call(interaction.original_response())

    # create the thread
    thread = call(response.create_thread(
        name=prompt,
        slowmode_delay=1,
        reason="gpt-bot",
        auto_archive_duration=60,
    ))
    thread.typing()

    sent_message = call(thread.send(f"⚙️ LocalAGI starts"))
    messages = []
    def action_callback(name, parameters):
        call(sent_message.edit(content=f"⚙️ Calling function '{name}' with {parameters}"))
    def reasoning_callback(name, reasoning):
        call(sent_message.edit(content=f"🤔 I'm thinking... '{reasoning}' (calling '{name}'), please wait.."))

    localagi = LocalAGI(
        agent_actions=agent_actions,
        llm_model=config["agent"]["llm_model"],
        tts_model=config["agent"]["tts_model"],
        action_callback=action_callback,
        reasoning_callback=reasoning_callback,
        tts_api_base=config["agent"]["tts_api_base"],
        functions_model=config["agent"]["functions_model"],
        api_base=config["agent"]["api_base"],
        stablediffusion_api_base=config["agent"]["stablediffusion_api_base"],
        stablediffusion_model=config["agent"]["stablediffusion_model"],
    )
    # remove bot ID from the message content
    prompt = prompt.replace(f"<@{client.user.id}>", "")

    conversation_history = localagi.evaluate(
        prompt,
        messages,
        subtaskContext=True,
        critic=CRITIC,
    )
    analyze_history(messages, conversation_history, call, interaction.channel)
    call(sent_message.edit(content=f"<@{user.id}> {conversation_history[-1]['content']}"))

@client.tree.command()
@app_commands.describe(prompt="Ask me anything!")
async def localai(interaction: discord.Interaction, prompt: str):
    loop = asyncio.get_running_loop()
    threading.Thread(target=run_localagi, args=[interaction, prompt,loop]).start()

# https://github.com/openai/gpt-discord-bot/blob/1161634a59c6fb642e58edb4f4fa1a46d2883d3b/src/utils.py#L15
def discord_message_to_message(message):
    if (
        message.type == discord.MessageType.thread_starter_message
        and message.reference.cached_message
        and len(message.reference.cached_message.embeds) > 0
        and len(message.reference.cached_message.embeds[0].fields) > 0
    ):
        field = message.reference.cached_message.embeds[0].fields[0]
        if field.value:
            return { "role": "user", "content": field.value }
    else:
        if message.content:
            return { "role": "user", "content": message.content }
    return None

@client.event
async def on_ready():
    loop = asyncio.get_running_loop()
    agent.loop = loop

@client.event
async def on_message(message):
    # ignore messages from the bot
    if message.author == client.user:
        return
    loop = asyncio.get_running_loop()
    # ignore messages not in a thread
    channel = message.channel
    if not isinstance(channel, discord.Thread) and client.user.mentioned_in(message):
        threading.Thread(target=run_localagi_message, args=[message,loop]).start()
        return
    if not isinstance(channel, discord.Thread):
        return
    # ignore threads not created by the bot
    thread = channel
    if thread.owner_id != client.user.id:
        return

    if thread.message_count > 5:
        # too many messages, no longer going to reply
        await close_thread(thread=thread)
        return

    channel_messages = [
        discord_message_to_message(message)
        async for message in thread.history(limit=5)
    ]
    channel_messages = [x for x in channel_messages if x is not None]
    channel_messages.reverse()