Compare commits

486 Commits

module...fix-mcp-co

| Author | SHA1 | Date | |

|---|---|---|---|

|

|

be8d914bb6 | ||

|

|

12209ab926 | ||

|

|

547e9cd0c4 | ||

|

|

6a1e536ca7 | ||

|

|

eb8663ada1 | ||

|

|

ce997d2425 | ||

|

|

56cd0e05ca | ||

|

|

25bb3fb123 | ||

|

|

9e52438877 | ||

|

|

c4618896cf | ||

|

|

ee1667d51a | ||

|

|

bafd26e92c | ||

|

|

8ecc18f76f | ||

|

|

985f07a529 | ||

|

|

8b2900c6d8 | ||

|

|

50e56fe22f | ||

|

|

b5a12a1da6 | ||

|

|

70e749b53a | ||

|

|

784a4c7969 | ||

|

|

43a2a142fa | ||

|

|

8ee5956bdb | ||

|

|

4888dfcdca | ||

|

|

a6b41fd3ab | ||

|

|

d25aed9a1a | ||

|

|

4a3f471f72 | ||

|

|

93154a0a27 | ||

|

|

59ab91d7df | ||

|

|

42590a7371 | ||

|

|

6260d4f168 | ||

|

|

4206da92a6 | ||

|

|

4d6fbf1caa | ||

|

|

97ef7acec0 | ||

|

|

77189b6114 | ||

|

|

c32d315910 | ||

|

|

606ffd8275 | ||

|

|

601dba3fc4 | ||

|

|

00ab476a77 | ||

|

|

906079cbbb | ||

|

|

808d9c981c | ||

|

|

2b79c99dd7 | ||

|

|

77905ed3cd | ||

|

|

60c249f19a | ||

|

|

209a9989c4 | ||

|

|

5105b46f48 | ||

|

|

e4c7d1acfc | ||

|

|

dd4fbd64d3 | ||

|

|

4010f9d86c | ||

|

|

0fda6e38db | ||

|

|

bffb5bd852 | ||

|

|

4d722c35d3 | ||

|

|

8dd0c3883b | ||

|

|

c2ec333777 | ||

|

|

2f19feff5e | ||

|

|

e128cde613 | ||

|

|

bc7f6f059c | ||

|

|

0eb68b6c20 | ||

|

|

1c4ab09335 | ||

|

|

abc7d6e080 | ||

|

|

cb15f926e8 | ||

|

|

70282535d4 | ||

|

|

5111738b3b | ||

|

|

c141a9bd80 | ||

|

|

c8cf70b1d0 | ||

|

|

3f83f5c4b0 | ||

|

|

45fbfed030 | ||

|

|

2b3f61aed1 | ||

|

|

e7111c6554 | ||

|

|

894dde9256 | ||

|

|

446908b759 | ||

|

|

18364d169e | ||

|

|

6464a33912 | ||

|

|

34caeea081 | ||

|

|

25286a828c | ||

|

|

6747fe87f2 | ||

|

|

09559f9ed9 | ||

|

|

4107a7a063 | ||

|

|

e3d4177c53 | ||

|

|

ee77bba615 | ||

|

|

0709f2f1ff | ||

|

|

a569e37a34 | ||

|

|

1eee5b5a32 | ||

|

|

ffee9d8307 | ||

|

|

ff20a0332e | ||

|

|

034f596e06 | ||

|

|

daa7dcd12a | ||

|

|

b81f34a8f8 | ||

|

|

6d9f1a95cc | ||

|

|

9f77bb99f1 | ||

|

|

74fdfd7a55 | ||

|

|

7494aa9c26 | ||

|

|

e90c192063 | ||

|

|

53d135bec9 | ||

|

|

99e0011920 | ||

|

|

5023bc77f4 | ||

|

|

a5ba49ec93 | ||

|

|

f3c06b1bfb | ||

|

|

86cb9f1282 | ||

|

|

d8cf5b419b | ||

|

|

bb4459b99f | ||

|

|

ab3e6ae3c8 | ||

|

|

1f8c601795 | ||

|

|

f70985362d | ||

|

|

cafaa0e153 | ||

|

|

491354280b | ||

|

|

4c40e47e8d | ||

|

|

045fb1f8d6 | ||

|

|

8e703c0ac2 | ||

|

|

29beee6057 | ||

|

|

d672842a81 | ||

|

|

45078e1fa7 | ||

|

|

c96c8d8009 | ||

|

|

11231f23ea | ||

|

|

c1dcda42ae | ||

|

|

7b52b9c22d | ||

|

|

dff678fc4e | ||

|

|

e0703cdb7c | ||

|

|

c940141e61 | ||

|

|

5fdd464fad | ||

|

|

68cfdecaee | ||

|

|

906b4ebd76 | ||

|

|

05cb8ba2eb | ||

|

|

0644daa477 | ||

|

|

62940a1a56 | ||

|

|

c6ce1c324f | ||

|

|

383fc1d0f4 | ||

|

|

8ac6f68568 | ||

|

|

05af5d9695 | ||

|

|

2c273392cd | ||

|

|

08f5417e96 | ||

|

|

f67ebe8c7a | ||

|

|

6ace4ab60d | ||

|

|

319caf8e91 | ||

|

|

7fb99ecf21 | ||

|

|

d520d88301 | ||

|

|

4dcc77372d | ||

|

|

0f2731f9e8 | ||

|

|

6e888f6008 | ||

|

|

2713349c75 | ||

|

|

b6cd62a8a3 | ||

|

|

dd6739cbbf | ||

|

|

5cd0eaae3f | ||

|

|

9d6b81d9c2 | ||

|

|

d5df14a714 | ||

|

|

8e9b87bcb1 | ||

|

|

3e36b09376 | ||

|

|

074aefd0df | ||

|

|

3e1081fc6e | ||

|

|

73af9538eb | ||

|

|

959dd8c7f3 | ||

|

|

71e66c651c | ||

|

|

438a65caf6 | ||

|

|

fb20bbe5bf | ||

|

|

fa12dba7c2 | ||

|

|

54c8bf5f1a | ||

|

|

7bc44167cf | ||

|

|

88933784de | ||

|

|

d7b503e30c | ||

|

|

e1e708ee75 | ||

|

|

9d81eb7509 | ||

|

|

ddc7d0e100 | ||

|

|

e26b55a6a8 | ||

|

|

ca3420c791 | ||

|

|

abd6d1bbf7 | ||

|

|

d0cfc4c317 | ||

|

|

53c1554d55 | ||

|

|

b09749dddb | ||

|

|

b199c10ab7 | ||

|

|

c8abc5f28f | ||

|

|

14948c965d | ||

|

|

558306a841 | ||

|

|

fb41663330 | ||

|

|

84836b8345 | ||

|

|

5f2a2eaa24 | ||

|

|

75a8d63e83 | ||

|

|

f0b8bfb4f4 | ||

|

|

fa25e7c077 | ||

|

|

3a9169bdbe | ||

|

|

3a921f6241 | ||

|

|

c1ac7b675a | ||

|

|

d689bb4331 | ||

|

|

b42ef27641 | ||

|

|

abb3ffc109 | ||

|

|

e5e238efc0 | ||

|

|

33483ab4b9 | ||

|

|

638eedc2a0 | ||

|

|

86d3596f41 | ||

|

|

16288c0fc3 | ||

|

|

5d42ebbc71 | ||

|

|

0513a327f6 | ||

|

|

c3d3bba32a | ||

|

|

1b187444fc | ||

|

|

d54abc3ed0 | ||

|

|

1e5b3f501f | ||

|

|

0e240077ab | ||

|

|

401172631d | ||

|

|

5b8ca0b756 | ||

|

|

8be14b7e3f | ||

|

|

96de3bdddd | ||

|

|

56d209f95d | ||

|

|

169c5e8aad | ||

|

|

d7cfa7f0b2 | ||

|

|

43a46ad1fb | ||

|

|

2de5152bfd | ||

|

|

a83f4512b6 | ||

|

|

08785e2908 | ||

|

|

8e694f70ec | ||

|

|

f0bd184fbd | ||

|

|

e32a569796 | ||

|

|

31b5849d02 | ||

|

|

29a8713427 | ||

|

|

3c3b5a774c | ||

|

|

35c75b61d8 | ||

|

|

33b5b8c8f4 | ||

|

|

dc2570c90b | ||

|

|

aea0b424b9 | ||

|

|

5e73be42cb | ||

|

|

53ebcdad5d | ||

|

|

a1cdabd0a8 | ||

|

|

26bcdf72a2 | ||

|

|

9347193fdc | ||

|

|

efc82bde30 | ||

|

|

6a451267d5 | ||

|

|

9ee0d89a6b | ||

|

|

10f7c8ff13 | ||

|

|

c69ee9e5f7 | ||

|

|

0ad2de72e0 | ||

|

|

1e484d7ccd | ||

|

|

7486e68a17 | ||

|

|

3763f320b9 | ||

|

|

16e0836fc7 | ||

|

|

6954ad3217 | ||

|

|

69e043f8e1 | ||

|

|

d451919414 | ||

|

|

9ff2fde44f | ||

|

|

40b0d4bfc9 | ||

|

|

14a70c3edd | ||

|

|

0b71d8dc10 | ||

|

|

bc60dde94f | ||

|

|

28e80084f6 | ||

|

|

7be93fb014 | ||

|

|

5ecb97e845 | ||

|

|

3827ebebdf | ||

|

|

106d1e61d4 | ||

|

|

b884d9433a | ||

|

|

f2e7010297 | ||

|

|

1e1c123d84 | ||

|

|

51ba87a7ba | ||

|

|

bf8d8be5ad | ||

|

|

311c0bb5ee | ||

|

|

7492a3ab3b | ||

|

|

127c76d006 | ||

|

|

2942668d89 | ||

|

|

d288755444 | ||

|

|

758a73e8ab | ||

|

|

5dd4b9cb65 | ||

|

|

d714c4f80b | ||

|

|

173eda4fb3 | ||

|

|

365f89cd5e | ||

|

|

5e52383a99 | ||

|

|

f6e16be170 | ||

|

|

5721c52c0d | ||

|

|

6c83f3d089 | ||

|

|

3a7e56cdf1 | ||

|

|

89d7da3817 | ||

|

|

7a98408336 | ||

|

|

e696c5ae31 | ||

|

|

042c1ee65c | ||

|

|

3a7b5e1461 | ||

|

|

4d6b04c6ed | ||

|

|

70c389ce0b | ||

|

|

5b4f618ca3 | ||

|

|

8492c95cb6 | ||

|

|

a75feaab4e | ||

|

|

3790ad3666 | ||

|

|

a57f990576 | ||

|

|

76a01994f9 | ||

|

|

4520d16522 | ||

|

|

0dce524c7a | ||

|

|

0b78956cc4 | ||

|

|

43352376e3 | ||

|

|

d3f2126614 | ||

|

|

cf112d57a6 | ||

|

|

f28725199c | ||

|

|

fbcc618355 | ||

|

|

094580724f | ||

|

|

371ea63f5a | ||

|

|

bc431ea6ff | ||

|

|

5c6df5adc0 | ||

|

|

46085077a5 | ||

|

|

33f1c102e4 | ||

|

|

b66e698b5e | ||

|

|

43c29fbdb0 | ||

|

|

0a18d8409e | ||

|

|

0139b79835 | ||

|

|

296734ba3b | ||

|

|

d73fd545b2 | ||

|

|

fa1ae086bf | ||

|

|

96091a1ad5 | ||

|

|

ac6c03bcb7 | ||

|

|

6ae019db23 | ||

|

|

2a6650c3ea | ||

|

|

5e6863379c | ||

|

|

8c447a0cf8 | ||

|

|

c68ff23b01 | ||

|

|

735bab5e32 | ||

|

|

c2035c95c5 | ||

|

|

351aa80b74 | ||

|

|

0fc09b4f49 | ||

|

|

1900915609 | ||

|

|

6bafb48cec | ||

|

|

2561f2f175 | ||

|

|

d053dc249c | ||

|

|

6318f1aa97 | ||

|

|

a204b3677b | ||

|

|

b1d90dbedd | ||

|

|

361927b0a4 | ||

|

|

b010f48ddc | ||

|

|

6d866985e8 | ||

|

|

989a2421ba | ||

|

|

3f9b454276 | ||

|

|

fdd4af1042 | ||

|

|

24ef65f70d | ||

|

|

5c3497a433 | ||

|

|

9974dec2e1 | ||

|

|

773259ceaa | ||

|

|

1fc0db18cd | ||

|

|

249cedd9d1 | ||

|

|

bc7192d664 | ||

|

|

22160cbf9e | ||

|

|

34f6d821b9 | ||

|

|

e2aa3bedd7 | ||

|

|

f6dcc09562 | ||

|

|

08563c3286 | ||

|

|

2cba2eafe6 | ||

|

|

c0773f03f8 | ||

|

|

bad3e53f06 | ||

|

|

96f7a4653f | ||

|

|

cac986d287 | ||

|

|

4a42ec0487 | ||

|

|

9d0dcf792a | ||

|

|

324cbcd2a6 | ||

|

|

35d9ba44f5 | ||

|

|

4ed61daf8e | ||

|

|

56a6a10980 | ||

|

|

ca57f4cd85 | ||

|

|

609b2a2b7c | ||

|

|

55b7b4a41e | ||

|

|

7db2b10bd2 | ||

|

|

f0bc2be678 | ||

|

|

ac8f6e94ff | ||

|

|

27f7299749 | ||

|

|

eda99d05f4 | ||

|

|

0bc95cfd16 | ||

|

|

80508001c7 | ||

|

|

7c0f615952 | ||

|

|

54de6221d1 | ||

|

|

74b158e9f1 | ||

|

|

4b41653d00 | ||

|

|

03175d36ab | ||

|

|

e6fdd91369 | ||

|

|

97f5ba16cd | ||

|

|

677a96074b | ||

|

|

82f1b37ae8 | ||

|

|

adf649be99 | ||

|

|

26baaecbcd | ||

|

|

fac211d8ee | ||

|

|

84a808fb7e | ||

|

|

6528da804f | ||

|

|

3170ee0ba2 | ||

|

|

3295942e59 | ||

|

|

fa39ae93f1 | ||

|

|

d237e17719 | ||

|

|

cb35f871db | ||

|

|

f85962769a | ||

|

|

3b361d1a3d | ||

|

|

ac580b562e | ||

|

|

230d012915 | ||

|

|

0dda705017 | ||

|

|

69cbcc5c88 | ||

|

|

437da44590 | ||

|

|

82ac74ac5d | ||

|

|

7c70f09834 | ||

|

|

73524adfce | ||

|

|

48d17b6952 | ||

|

|

db490fb3ca | ||

|

|

a1edf005a9 | ||

|

|

78ba7871e9 | ||

|

|

36abf837a9 | ||

|

|

4453f43bec | ||

|

|

414a973282 | ||

|

|

80361a6400 | ||

|

|

f11c8f3f57 | ||

|

|

c56e277a2b | ||

|

|

cf3c4549da | ||

|

|

66b1847644 | ||

|

|

533caeee96 | ||

|

|

185fb89d39 | ||

|

|

7adcce78be | ||

|

|

dbfc596333 | ||

|

|

dda993e43b | ||

|

|

00078b5da8 | ||

|

|

a4a2a172f5 | ||

|

|

8db36b4619 | ||

|

|

59b91d1403 | ||

|

|

23867bf0e6 | ||

|

|

27202c3622 | ||

|

|

dc72904cee | ||

|

|

8ceaab2ac1 | ||

|

|

015b26096f | ||

|

|

215c3ddbf7 | ||

|

|

84c56f6c3e | ||

|

|

90fd130e31 | ||

|

|

9f5a32a1bf | ||

|

|

13b08c8cb3 | ||

|

|

4f9ec2896b | ||

|

|

e3987e9bd1 | ||

|

|

899e3c0771 | ||

|

|

f2c74e29e8 | ||

|

|

5f29125bbb | ||

|

|

32f4e53242 | ||

|

|

3f6b68a60a | ||

|

|

9e8b1dab84 | ||

|

|

652cef288d | ||

|

|

744af19025 | ||

|

|

5c58072ad7 | ||

|

|

98c53e042d | ||

|

|

79e5dffe09 | ||

|

|

b4fd482f66 | ||

|

|

9173156e40 | ||

|

|

936b2af4ca | ||

|

|

9df690999c | ||

|

|

7db9aea57b | ||

|

|

41692d700c | ||

|

|

e6090c62cf | ||

|

|

a404b75fbe | ||

|

|

58ba4db1dd | ||

|

|

9417c5ca8f | ||

|

|

8e3a1fcbe5 | ||

|

|

7c679ead94 | ||

|

|

b45490e84d | ||

|

|

2ebbf1007f | ||

|

|

fdb0585dcb | ||

|

|

56ceedb4fb | ||

|

|

f2e09dfe81 | ||

|

|

eb4294bdbb | ||

|

|

3b1a54083d | ||

|

|

aa62d9ef9e | ||

|

|

8601956e53 | ||

|

|

11486d4720 | ||

|

|

658681f344 | ||

|

|

9926674c38 | ||

|

|

61e4be0d0c | ||

|

|

d52e3b8309 | ||

|

|

2cc907cbd7 | ||

|

|

3790a872ea | ||

|

|

d22154e9be | ||

|

|

a1203c8f14 | ||

|

|

509080c8f2 | ||

|

|

2535895214 | ||

|

|

60e9d66b36 | ||

|

|

df15681400 | ||

|

|

f1b39164ef | ||

|

|

7e3f2cbffb | ||

|

|

0f953d8ad9 | ||

|

|

491a0f2b3d | ||

|

|

e606d637e2 | ||

|

|

ab24a2a7cf | ||

|

|

8164190425 | ||

|

|

d1ae51ae5d | ||

|

|

f740f307e2 | ||

|

|

d5f72a6c82 | ||

|

|

87736e464a | ||

|

|

414f9ca765 | ||

|

|

a7462249a7 | ||

|

|

317b91a7e9 | ||

|

|

ee8351a637 | ||

|

|

d01ee8e27b | ||

|

|

d08a097175 | ||

|

|

42d35dd7a3 | ||

|

|

a823131a2d | ||

|

|

d32940e604 | ||

|

|

11514a0e0c | ||

|

|

ee535536ad | ||

|

|

1ad68eca01 | ||

|

|

d57db7449a | ||

|

|

a3300dfb6d |

@@ -1,2 +1,3 @@
models/
db/
data/
volumes/

26

.env

@@ -1,26 +0,0 @@
# Enable debug mode in the LocalAI API
DEBUG=true

# Where models are stored
MODELS_PATH=/models

# Galleries to use
GALLERIES=[{"name":"model-gallery", "url":"github:go-skynet/model-gallery/index.yaml"}, {"url": "github:go-skynet/model-gallery/huggingface.yaml","name":"huggingface"}]

# Select model configuration in the config directory
#PRELOAD_MODELS_CONFIG=/config/wizardlm-13b.yaml
PRELOAD_MODELS_CONFIG=/config/wizardlm-13b.yaml
#PRELOAD_MODELS_CONFIG=/config/wizardlm-13b-superhot.yaml

# You don't need to put a valid OpenAI key, however, the python libraries expect
# the string to be set or panics
OPENAI_API_KEY=sk---

# Set the OpenAI API base URL to point to LocalAI
DEFAULT_API_BASE=http://api:8080

# Set an image path
IMAGE_PATH=/tmp

# Set number of default threads
THREADS=14

19

.github/dependabot.yml

vendored

Normal file

@@ -0,0 +1,19 @@
# https://docs.github.com/en/code-security/dependabot/working-with-dependabot/dependabot-options-reference#package-ecosystem-
version: 2
updates:
  - package-ecosystem: "github-actions"
    directory: "/"
    schedule:
      interval: "monthly"
  - package-ecosystem: "docker"
    directory: "/"
    schedule:
      interval: "monthly"
  - package-ecosystem: "gomod"
    directory: "/"
    schedule:
      interval: "weekly"
  - package-ecosystem: "bun"
    directory: "/webui/react-ui"
    schedule:
      interval: "weekly"

32

.github/workflows/goreleaser.yml

vendored

Normal file

@@ -0,0 +1,32 @@
name: goreleaser

on:
  push:
    tags:
      - 'v*' # Add this line to trigger the workflow on tag pushes that match 'v*'

permissions:
  id-token: write
  contents: read

jobs:
  goreleaser:
    permissions:
      contents: write
    runs-on: ubuntu-latest
    steps:
      - name: Checkout
        uses: actions/checkout@v4
        with:
          fetch-depth: 0
      - name: Set up Go
        uses: actions/setup-go@v5
        with:
          go-version: 1.24
      - name: Run GoReleaser
        uses: goreleaser/goreleaser-action@v6
        with:
          version: '~> v2'
          args: release --clean
        env:
          GITHUB_TOKEN: ${{ secrets.GITHUB_TOKEN }}

160

.github/workflows/image.yml

vendored

Normal file

@@ -0,0 +1,160 @@
---
name: 'build container images'
on:
  push:
    branches:
      - main
    tags:
      - '*'
concurrency:
  group: ci-image-${{ github.head_ref || github.ref }}-${{ github.repository }}
  cancel-in-progress: true
jobs:
  containerImages:
    runs-on: ubuntu-latest
    steps:
      - name: Checkout
        uses: actions/checkout@v4

      - name: Prepare
        id: prep
        run: |
          DOCKER_IMAGE=quay.io/mudler/localagi
          # Use branch name as default
          VERSION=${GITHUB_REF#refs/heads/}
          BINARY_VERSION=$(git describe --always --tags --dirty)
          SHORTREF=${GITHUB_SHA::8}
          # If this is git tag, use the tag name as a docker tag
          if [[ $GITHUB_REF == refs/tags/* ]]; then
            VERSION=${GITHUB_REF#refs/tags/}
          fi
          TAGS="${DOCKER_IMAGE}:${VERSION},${DOCKER_IMAGE}:${SHORTREF}"
          # If the VERSION looks like a version number, assume that
          # this is the most recent version of the image and also
          # tag it 'latest'.
          if [[ $VERSION =~ ^[0-9]{1,3}\.[0-9]{1,3}\.[0-9]{1,3}$ ]]; then
            TAGS="$TAGS,${DOCKER_IMAGE}:latest"
          fi
          # Set output parameters.
          echo ::set-output name=binary_version::${BINARY_VERSION}
          echo ::set-output name=tags::${TAGS}
          echo ::set-output name=docker_image::${DOCKER_IMAGE}
      - name: Set up QEMU
        uses: docker/setup-qemu-action@master
        with:
          platforms: all

      - name: Set up Docker Buildx
        id: buildx
        uses: docker/setup-buildx-action@master

      - name: Login to DockerHub
        if: github.event_name != 'pull_request'
        uses: docker/login-action@v3
        with:
          registry: quay.io
          username: ${{ secrets.DOCKER_USERNAME }}
          password: ${{ secrets.DOCKER_PASSWORD }}
      - name: Extract metadata (tags, labels) for Docker
        id: meta
        uses: docker/metadata-action@902fa8ec7d6ecbf8d84d538b9b233a880e428804
        with:
          images: quay.io/mudler/localagi
          tags: |
            type=ref,event=branch,suffix=-{{date 'YYYYMMDDHHmmss'}}
            type=semver,pattern={{raw}}
            type=sha,suffix=-{{date 'YYYYMMDDHHmmss'}}
            type=ref,event=branch
          flavor: |
            latest=auto
            prefix=
            suffix=

      - name: Build
        uses: docker/build-push-action@v6
        with:
          builder: ${{ steps.buildx.outputs.name }}
          build-args: |
            VERSION=${{ steps.prep.outputs.binary_version }}
          context: ./
          file: ./Dockerfile.webui
          #platforms: linux/amd64,linux/arm64
          platforms: linux/amd64
          push: true
          #tags: ${{ steps.prep.outputs.tags }}
          tags: ${{ steps.meta.outputs.tags }}
          labels: ${{ steps.meta.outputs.labels }}
  mcpbox-build:
    runs-on: ubuntu-latest
    steps:
      - name: Checkout
        uses: actions/checkout@v4

      - name: Prepare
        id: prep
        run: |
          DOCKER_IMAGE=quay.io/mudler/localagi-mcpbox
          # Use branch name as default
          VERSION=${GITHUB_REF#refs/heads/}
          BINARY_VERSION=$(git describe --always --tags --dirty)
          SHORTREF=${GITHUB_SHA::8}
          # If this is git tag, use the tag name as a docker tag
          if [[ $GITHUB_REF == refs/tags/* ]]; then
            VERSION=${GITHUB_REF#refs/tags/}
          fi
          TAGS="${DOCKER_IMAGE}:${VERSION},${DOCKER_IMAGE}:${SHORTREF}"
          # If the VERSION looks like a version number, assume that
          # this is the most recent version of the image and also
          # tag it 'latest'.
          if [[ $VERSION =~ ^[0-9]{1,3}\.[0-9]{1,3}\.[0-9]{1,3}$ ]]; then
            TAGS="$TAGS,${DOCKER_IMAGE}:latest"
          fi
          # Set output parameters.
          echo ::set-output name=binary_version::${BINARY_VERSION}
          echo ::set-output name=tags::${TAGS}
          echo ::set-output name=docker_image::${DOCKER_IMAGE}
      - name: Set up QEMU
        uses: docker/setup-qemu-action@master
        with:
          platforms: all

      - name: Set up Docker Buildx
        id: buildx
        uses: docker/setup-buildx-action@master

      - name: Login to DockerHub
        if: github.event_name != 'pull_request'
        uses: docker/login-action@v3
        with:
          registry: quay.io
          username: ${{ secrets.DOCKER_USERNAME }}
          password: ${{ secrets.DOCKER_PASSWORD }}
      - name: Extract metadata (tags, labels) for Docker
        id: meta
        uses: docker/metadata-action@902fa8ec7d6ecbf8d84d538b9b233a880e428804
        with:
          images: quay.io/mudler/localagi-mcpbox
          tags: |
            type=ref,event=branch,suffix=-{{date 'YYYYMMDDHHmmss'}}
            type=semver,pattern={{raw}}
            type=sha,suffix=-{{date 'YYYYMMDDHHmmss'}}
            type=ref,event=branch
          flavor: |
            latest=auto
            prefix=
            suffix=

      - name: Build
        uses: docker/build-push-action@v6
        with:
          builder: ${{ steps.buildx.outputs.name }}
          build-args: |
            VERSION=${{ steps.prep.outputs.binary_version }}
          context: ./
          file: ./Dockerfile.mcpbox
          #platforms: linux/amd64,linux/arm64
          platforms: linux/amd64
          push: true
          #tags: ${{ steps.prep.outputs.tags }}
          tags: ${{ steps.meta.outputs.tags }}
          labels: ${{ steps.meta.outputs.labels }}
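The `Prepare` step in `.github/workflows/image.yml` derives a tag list from `GITHUB_REF` and `GITHUB_SHA`. A minimal Python sketch of that logic follows, assuming it mirrors the bash shown in the diff; the function name `derive_tags` and the sample SHA are hypothetical and are not part of the repository. Note that in the workflow the `Build` step takes its tags from the `meta` step, and the `prep` tags line is commented out, so this only illustrates what the `prep` output would contain.

```python
import re

# Same pattern as the bash test in the workflow: three dot-separated
# groups of one to three digits, with no leading "v".
VERSION_LIKE = re.compile(r"^[0-9]{1,3}\.[0-9]{1,3}\.[0-9]{1,3}$")


def _strip_prefix(value, prefix):
    """Mimic bash ${value#prefix}: drop the prefix only when it is present."""
    return value[len(prefix):] if value.startswith(prefix) else value


def derive_tags(image, github_ref, github_sha):
    """Hypothetical helper reproducing the tag list built by the Prepare step."""
    # Branch name is the default version.
    version = _strip_prefix(github_ref, "refs/heads/")
    # For a git tag, the tag name becomes the version instead.
    if github_ref.startswith("refs/tags/"):
        version = _strip_prefix(github_ref, "refs/tags/")
    tags = [f"{image}:{version}", f"{image}:{github_sha[:8]}"]
    # Only bare x.y.z versions also receive the "latest" tag.
    if VERSION_LIKE.match(version):
        tags.append(f"{image}:latest")
    return tags


if __name__ == "__main__":
    sha = "0123456789abcdef"  # sample value, not a real commit
    print(derive_tags("quay.io/mudler/localagi", "refs/tags/1.2.3", sha))
```

One consequence worth noting: a tag such as `v1.2.3` does not match the pattern, so under this logic it would not be tagged `latest`.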

50

.github/workflows/tests.yml

vendored

Normal file

@@ -0,0 +1,50 @@
name: Run Go Tests

on:
  push:
    branches:
      - 'main'
  pull_request:
    branches:
      - '**'
concurrency:
  group: ci-tests-${{ github.head_ref || github.ref }}-${{ github.repository }}
  cancel-in-progress: true
jobs:
  test:
    runs-on: ubuntu-latest
    steps:
      - name: Checkout code
        uses: actions/checkout@v4
      - run: |
          # Add Docker's official GPG key:
          sudo apt-get update
          sudo apt-get install ca-certificates curl
          sudo install -m 0755 -d /etc/apt/keyrings
          sudo curl -fsSL https://download.docker.com/linux/ubuntu/gpg -o /etc/apt/keyrings/docker.asc
          sudo chmod a+r /etc/apt/keyrings/docker.asc

          # Add the repository to Apt sources:
          echo \
            "deb [arch=$(dpkg --print-architecture) signed-by=/etc/apt/keyrings/docker.asc] https://download.docker.com/linux/ubuntu \
            $(. /etc/os-release && echo "${UBUNTU_CODENAME:-$VERSION_CODENAME}") stable" | \
            sudo tee /etc/apt/sources.list.d/docker.list > /dev/null
          sudo apt-get update
          sudo apt-get install -y docker-ce docker-ce-cli containerd.io docker-buildx-plugin docker-compose-plugin
          docker version

          docker run --rm hello-world
      - uses: actions/setup-go@v5
        with:
          go-version: '>=1.17.0'
      - name: Run tests
        run: |
          sudo apt-get update && sudo apt-get install -y make
          make tests
          #sudo mv coverage/coverage.txt coverage.txt
          #sudo chmod 777 coverage.txt

      # - name: Upload coverage to Codecov
      #   uses: codecov/codecov-action@v4
      #   with:
      #     token: ${{ secrets.CODECOV_TOKEN }}
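Both the image and test workflows key their `concurrency.group` on `github.head_ref || github.ref`. As a rough illustration of how that expression resolves, here is a small Python sketch; it assumes the usual GitHub Actions behaviour that `head_ref` is only populated for pull request events and that `||` yields the first truthy operand. The function name, the repository string, and the pull request number are example values, not taken from the diff.

```python
def concurrency_group(prefix, repository, ref, head_ref=""):
    """Hypothetical model of '<prefix>-${{ head_ref || ref }}-${{ repository }}'."""
    # An empty head_ref (push events) falls through to the full ref.
    return f"{prefix}-{head_ref or ref}-{repository}"


if __name__ == "__main__":
    # Push to main: no head_ref, so the full ref is used.
    print(concurrency_group("ci-image", "mudler/LocalAGI", "refs/heads/main"))
    # Pull request: the source branch name identifies the group.
    print(concurrency_group("ci-tests", "mudler/LocalAGI",
                            "refs/pull/42/merge", head_ref="fix-mcp-co"))
```

With `cancel-in-progress: true`, a newer run in the same group cancels the older one, so successive pushes to one pull request branch would share a group under this model.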

10

.gitignore

vendored

@@ -1,2 +1,10 @@
db/
models/
data/
pool
uploads/
local-agent-framework
localagi
LocalAGI
**/.env
.vscode
volumes/

40

.goreleaser.yml

Normal file

@@ -0,0 +1,40 @@
# Make sure to check the documentation at http://goreleaser.com
version: 2
builds:
  - main: ./
    id: "localagi"
    binary: localagi
    ldflags:
      - -w -s
      # - -X github.com/internal.Version={{.Tag}}
      # - -X github.com/internal.Commit={{.Commit}}
    env:
      - CGO_ENABLED=0
    goos:
      - linux
      - windows
      - darwin
      - freebsd
    goarch:
      - amd64
      - arm
      - arm64
source:
  enabled: true
  name_template: '{{ .ProjectName }}-{{ .Tag }}-source'
archives:
  # Default template uses underscores instead of -
  - name_template: >-
      {{ .ProjectName }}-{{ .Tag }}-
      {{- if eq .Os "freebsd" }}FreeBSD
      {{- else }}{{- title .Os }}{{end}}-
      {{- if eq .Arch "amd64" }}x86_64
      {{- else if eq .Arch "386" }}i386
      {{- else }}{{ .Arch }}{{end}}
      {{- if .Arm }}v{{ .Arm }}{{ end }}
checksum:
  name_template: '{{ .ProjectName }}-{{ .Tag }}-checksums.txt'
snapshot:
  name_template: "{{ .Tag }}-next"
changelog:
  use: github-native
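The `archives.name_template` in `.goreleaser.yml` is easier to follow with concrete outputs. The Python sketch below approximates the template's branching; it is an illustration only, not GoReleaser's implementation. The function name `archive_name`, the project name `localagi`, the tag `v1.2.3`, and the ARM variant `6` are assumptions chosen for the example.

```python
def archive_name(project, tag, os_name, arch, arm=""):
    """Hypothetical approximation of the archive name template in the diff."""
    # "freebsd" is special-cased; other OS names are title-cased.
    os_part = "FreeBSD" if os_name == "freebsd" else os_name.capitalize()
    # amd64 and 386 are renamed; every other architecture passes through.
    if arch == "amd64":
        arch_part = "x86_64"
    elif arch == "386":
        arch_part = "i386"
    else:
        arch_part = arch
    # The ARM variant, when set, is appended as a "v<N>" suffix.
    arm_part = f"v{arm}" if arm else ""
    return f"{project}-{tag}-{os_part}-{arch_part}{arm_part}"


if __name__ == "__main__":
    print(archive_name("localagi", "v1.2.3", "linux", "amd64"))
    print(archive_name("localagi", "v1.2.3", "freebsd", "arm", arm="6"))
```

The `{{-` markers in the template trim the whitespace introduced by the folded YAML scalar, which is why the parts join without spaces.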

18

Dockerfile

@@ -1,18 +0,0 @@
FROM python:3.10-bullseye
WORKDIR /app
COPY ./requirements.txt /app/requirements.txt
RUN pip install --no-cache-dir -r requirements.txt


ENV DEBIAN_FRONTEND noninteractive

# Install package dependencies
RUN apt-get update -y && \
    apt-get install -y --no-install-recommends \
    alsa-utils \
    libsndfile1-dev && \
    apt-get clean

COPY . /app
RUN pip install .
ENTRYPOINT [ "python", "./main.py" ];

47

Dockerfile.mcpbox

Normal file

@@ -0,0 +1,47 @@
# Build stage
FROM golang:1.24-alpine AS builder

# Install build dependencies
RUN apk add --no-cache git

# Set working directory
WORKDIR /app

# Copy go mod files
COPY go.mod go.sum ./

# Download dependencies
RUN go mod download

# Copy source code
COPY . .

# Build the application
RUN CGO_ENABLED=0 GOOS=linux go build -o mcpbox ./cmd/mcpbox

# Final stage
FROM alpine:3.19

# Install runtime dependencies
RUN apk add --no-cache ca-certificates tzdata docker

# Create non-root user
#RUN adduser -D -g '' appuser

# Set working directory
WORKDIR /app

# Copy binary from builder
COPY --from=builder /app/mcpbox .

# Use non-root user
#USER appuser

# Expose port
EXPOSE 8080

# Set entrypoint
ENTRYPOINT ["/app/mcpbox"]

# Default command
CMD ["-addr", ":8080"]

12

Dockerfile.realtimesst

Normal file

@@ -0,0 +1,12 @@
# python
FROM python:3.13-slim

ENV DEBIAN_FRONTEND=noninteractive
RUN apt-get update && apt-get install -y python3-dev portaudio19-dev ffmpeg build-essential

RUN pip install RealtimeSTT

#COPY ./example/realtimesst /app
# https://github.com/KoljaB/RealtimeSTT/blob/master/RealtimeSTT_server/README.md#server-usage
ENTRYPOINT ["stt-server"]
#ENTRYPOINT [ "/app/main.py" ]

55

Dockerfile.webui

Normal file

@@ -0,0 +1,55 @@
# Use Bun container for building the React UI
FROM oven/bun:1 AS ui-builder

# Set the working directory for the React UI
WORKDIR /app

# Copy package.json and bun.lockb (if exists)
COPY webui/react-ui/package.json webui/react-ui/bun.lockb* ./

# Install dependencies
RUN bun install --frozen-lockfile

# Copy the rest of the React UI source code
COPY webui/react-ui/ ./

# Build the React UI
RUN bun run build

# Use a temporary build image based on Golang 1.24-alpine
FROM golang:1.24-alpine AS builder

# Define argument for linker flags
ARG LDFLAGS="-s -w"

# Install git
RUN apk add --no-cache git
RUN rm -rf /tmp/* /var/cache/apk/*

# Set the working directory
WORKDIR /work

# Copy go.mod and go.sum files first to leverage Docker cache
COPY go.mod go.sum ./

# Download dependencies - this layer will be cached as long as go.mod and go.sum don't change
RUN go mod download

# Now copy the rest of the source code
COPY . .

# Copy the built React UI from the ui-builder stage
COPY --from=ui-builder /app/dist /work/webui/react-ui/dist

# Build the application
RUN CGO_ENABLED=0 go build -ldflags="$LDFLAGS" -o localagi ./

FROM scratch

# Copy the webui binary from the builder stage to the final image
COPY --from=builder /work/localagi /localagi
COPY --from=builder /etc/ssl/ /etc/ssl/
COPY --from=builder /tmp /tmp

# Define the command that will be run when the container is started
ENTRYPOINT ["/localagi"]

2

LICENSE

@@ -1,6 +1,6 @@
MIT License

Copyright (c) 2023 Ettore Di Giacinto
Copyright (c) 2023-2025 Ettore Di Giacinto (mudler@localai.io)

Permission is hereby granted, free of charge, to any person obtaining a copy
of this software and associated documentation files (the "Software"), to deal

40

Makefile

Normal file

@@ -0,0 +1,40 @@
GOCMD?=go
IMAGE_NAME?=webui
MCPBOX_IMAGE_NAME?=mcpbox
ROOT_DIR:=$(shell dirname $(realpath $(lastword $(MAKEFILE_LIST))))

prepare-tests: build-mcpbox
	docker compose up -d --build
	docker run -d -v /var/run/docker.sock:/var/run/docker.sock --privileged -p 9090:8080 --rm -ti $(MCPBOX_IMAGE_NAME)

cleanup-tests:
	docker compose down

tests: prepare-tests
	LOCALAGI_MCPBOX_URL="http://localhost:9090" LOCALAGI_MODEL="gemma-3-12b-it-qat" LOCALAI_API_URL="http://localhost:8081" LOCALAGI_API_URL="http://localhost:8080" $(GOCMD) run github.com/onsi/ginkgo/v2/ginkgo --fail-fast -v -r ./...

run-nokb:
	$(MAKE) run KBDISABLEINDEX=true

webui/react-ui/dist:
	docker run --entrypoint /bin/bash -v $(ROOT_DIR):/app oven/bun:1 -c "cd /app/webui/react-ui && bun install && bun run build"

.PHONY: build
build: webui/react-ui/dist
	$(GOCMD) build -o localagi ./

.PHONY: run
run: webui/react-ui/dist
	LOCALAGI_MCPBOX_URL="http://localhost:9090" $(GOCMD) run ./

build-image:
	docker build -t $(IMAGE_NAME) -f Dockerfile.webui .

image-push:
	docker push $(IMAGE_NAME)

build-mcpbox:
	docker build -t $(MCPBOX_IMAGE_NAME) -f Dockerfile.mcpbox .

run-mcpbox:
	docker run -v /var/run/docker.sock:/var/run/docker.sock --privileged -p 9090:8080 -ti mcpbox

635

README.md

635

README.md

@@ -1,181 +1,520 @@

|

||||

<p align="center">

|

||||

<img src="./webui/react-ui/public/logo_1.png" alt="LocalAGI Logo" width="220"/>

|

||||

</p>

|

||||

|

||||

<h1 align="center">

|

||||

<br>

|

||||

<img height="300" src="https://github.com/mudler/LocalAGI/assets/2420543/b69817ce-2361-4234-a575-8f578e159f33"> <br>

|

||||

LocalAGI

|

||||

<br>

|

||||

</h1>

|

||||

<h3 align="center"><em>Your AI. Your Hardware. Your Rules</em></h3>

|

||||

|

||||

[AutoGPT](https://github.com/Significant-Gravitas/Auto-GPT), [babyAGI](https://github.com/yoheinakajima/babyagi), ... and now LocalAGI!

|

||||

<div align="center">

|

||||

|

||||

LocalAGI is a small 🤖 virtual assistant that you can run locally, made by the [LocalAI](https://github.com/go-skynet/LocalAI) author and powered by it.

|

||||

[](https://goreportcard.com/report/github.com/mudler/LocalAGI)

|

||||

[](https://opensource.org/licenses/MIT)

|

||||

[](https://github.com/mudler/LocalAGI/stargazers)

|

||||

[](https://github.com/mudler/LocalAGI/issues)

|

||||

|

||||

The goal is:

|

||||

- Keep it simple, hackable and easy to understand

|

||||

- No API keys needed, No cloud services needed, 100% Local. Tailored for Local use, however still compatible with OpenAI.

|

||||

- Smart-agent/virtual assistant that can do tasks

|

||||

- Small set of dependencies

|

||||

- Run with Docker/Podman/Containers

|

||||

- Rather than trying to do everything, provide a good starting point for other projects

|

||||

</div>

|

||||

|

||||

Note: Be warned! It was hacked in a weekend, and it's just an experiment to see what can be done with local LLMs.

|

||||

Create customizable AI assistants, automations, chat bots and agents that run 100% locally. No need for agentic Python libraries or cloud service keys, just bring your GPU (or even just CPU) and a web browser.

|

||||

|

||||

|

||||

**LocalAGI** is a powerful, self-hostable AI Agent platform that allows you to design AI automations without writing code. A complete drop-in replacement for OpenAI's Responses APIs with advanced agentic capabilities. No clouds. No data leaks. Just pure local AI that works on consumer-grade hardware (CPU and GPU).

|

||||

|

||||

## 🚀 Features

|

||||

## 🛡️ Take Back Your Privacy

|

||||

|

||||

- 🧠 LLM for intent detection

|

||||

- 🧠 Uses functions for actions

|

||||

- 📝 Write to long-term memory

|

||||

- 📖 Read from long-term memory

|

||||

- 🌐 Internet access for search

|

||||

- :card_file_box: Write files

|

||||

- 🔌 Plan steps to achieve a goal

|

||||

- 🤖 Avatar creation with Stable Diffusion

|

||||

- 🗨️ Conversational

|

||||

- 🗣️ Voice synthesis with TTS

|

||||

Are you tired of AI wrappers calling out to cloud APIs, risking your privacy? So were we.

|

||||

|

||||

## Demo

|

||||

LocalAGI ensures your data stays exactly where you want it—on your hardware. No API keys, no cloud subscriptions, no compromise.

|

||||

|

||||

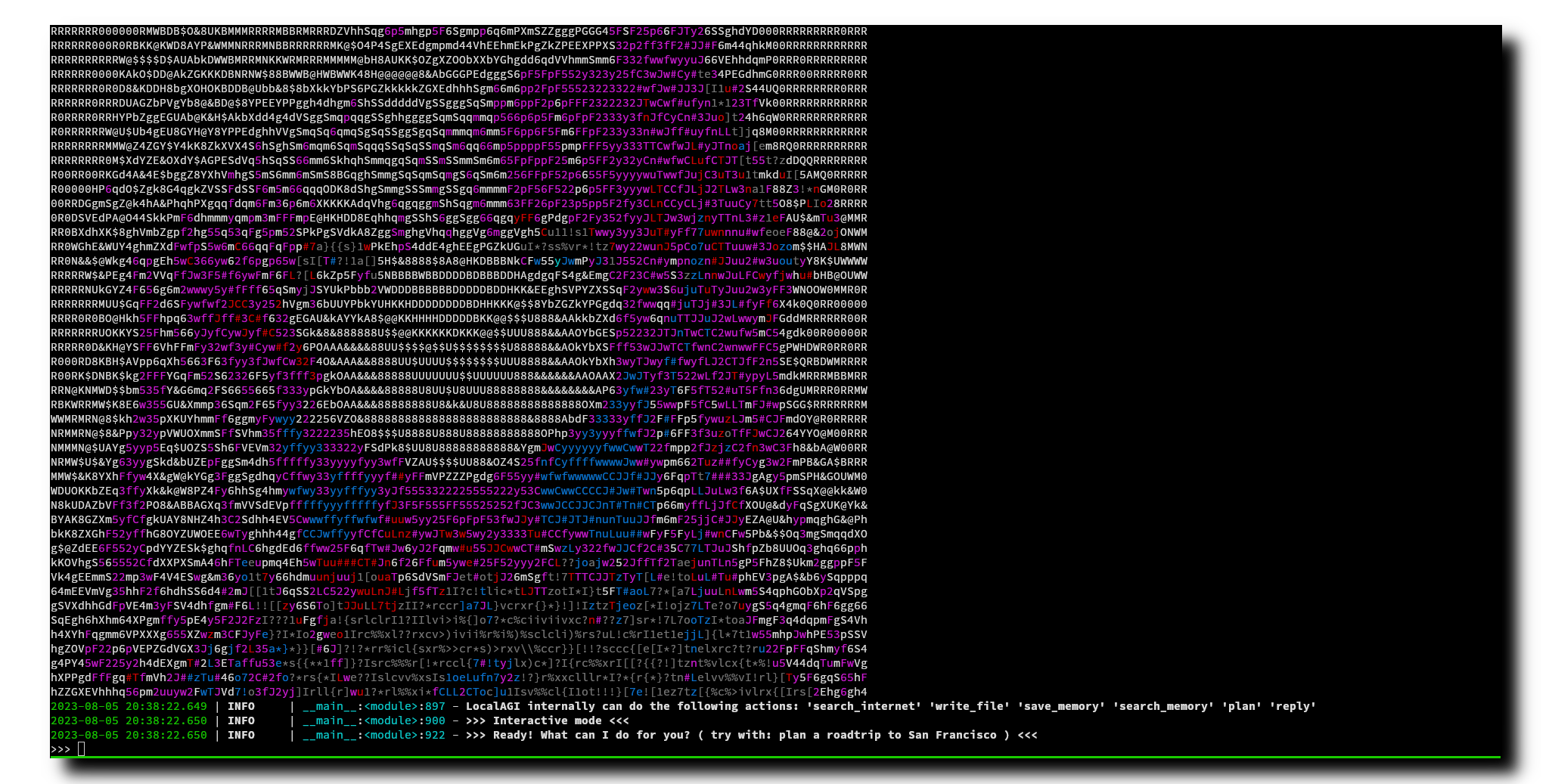

Search on internet (interactive mode)

|

||||

## 🌟 Key Features

|

||||

|

||||

https://github.com/mudler/LocalAGI/assets/2420543/23199ca3-7380-4efc-9fac-a6bc2b52bdb3

|

||||

- 🎛 **No-Code Agents**: Easy-to-configure multiple agents via Web UI.

|

||||

- 🖥 **Web-Based Interface**: Simple and intuitive agent management.

|

||||

- 🤖 **Advanced Agent Teaming**: Instantly create cooperative agent teams from a single prompt.

|

||||

- 📡 **Connectors Galore**: Built-in integrations with Discord, Slack, Telegram, GitHub Issues, and IRC.

|

||||

- 🛠 **Comprehensive REST API**: Seamless integration into your workflows. Every agent created will support OpenAI Responses API out of the box.

|

||||

- 📚 **Short & Long-Term Memory**: Powered by [LocalRecall](https://github.com/mudler/LocalRecall).

|

||||

- 🧠 **Planning & Reasoning**: Agents intelligently plan, reason, and adapt.

|

||||

- 🔄 **Periodic Tasks**: Schedule tasks with cron-like syntax.

|

||||

- 💾 **Memory Management**: Control memory usage with options for long-term and summary memory.

|

||||

- 🖼 **Multimodal Support**: Ready for vision, text, and more.

|

||||

- 🔧 **Extensible Custom Actions**: Easily script dynamic agent behaviors in Go (interpreted, no compilation!).

|

||||

- 🛠 **Fully Customizable Models**: Use your own models or integrate seamlessly with [LocalAI](https://github.com/mudler/LocalAI).

|

||||

- 📊 **Observability**: Monitor agent status and view detailed observable updates in real-time.

|

||||

|

||||

Plan a road trip (batch mode)

|

||||

|

||||

https://github.com/mudler/LocalAGI/assets/2420543/9ba43b82-dec5-432a-bdb9-8318e7db59a4

|

||||

|

||||

> Note: The demo runs on a GPU with a `30b` model

|

||||

|

||||

## :book: Quick start

|

||||

|

||||

No frills, just run docker-compose and start chatting with your virtual assistant:

|

||||

## 🛠️ Quickstart

|

||||

|

||||

```bash

|

||||

# Modify the configuration

|

||||

# vim .env

|

||||

docker-compose run -i --rm localagi

|

||||

# Clone the repository

|

||||

git clone https://github.com/mudler/LocalAGI

|

||||

cd LocalAGI

|

||||

|

||||

# CPU setup (default)

|

||||

docker compose up

|

||||

|

||||

# NVIDIA GPU setup

|

||||

docker compose -f docker-compose.nvidia.yaml up

|

||||

|

||||

# Intel GPU setup (for Intel Arc and integrated GPUs)

|

||||

docker compose -f docker-compose.intel.yaml up

|

||||

|

||||

# Start with a specific model (see available models in models.localai.io, or localai.io to use any model in huggingface)

|

||||

MODEL_NAME=gemma-3-12b-it docker compose up

|

||||

|

||||

# NVIDIA GPU setup with custom multimodal and image models

|

||||

MODEL_NAME=gemma-3-12b-it \

|

||||

MULTIMODAL_MODEL=minicpm-v-2_6 \

|

||||

IMAGE_MODEL=flux.1-dev-ggml \

|

||||

docker compose -f docker-compose.nvidia.yaml up

|

||||

```

|

||||

|

||||

## How to use it

|

||||

Now you can access and manage your agents at [http://localhost:8080](http://localhost:8080)

|

||||

|

||||

By default localagi starts in interactive mode

|

||||

Still having issues? see this Youtube video: https://youtu.be/HtVwIxW3ePg

|

||||

|

||||

### Examples

|

||||

## 📚🆕 Local Stack Family

|

||||

|

||||

Road trip planner by limiting searching to internet to 3 results only:

|

||||

🆕 LocalAI is now part of a comprehensive suite of AI tools designed to work together:

|

||||

|

||||

<table>

|

||||

<tr>

|

||||

<td width="50%" valign="top">

|

||||

<a href="https://github.com/mudler/LocalAI">

|

||||

<img src="https://raw.githubusercontent.com/mudler/LocalAI/refs/heads/master/core/http/static/logo_horizontal.png" width="300" alt="LocalAI Logo">

|

||||

</a>

|

||||

</td>

|

||||

<td width="50%" valign="top">

|

||||

<h3><a href="https://github.com/mudler/LocalAI">LocalAI</a></h3>

|

||||

<p>LocalAI is the free, Open Source OpenAI alternative. LocalAI acts as a drop-in replacement REST API that's compatible with OpenAI API specifications for local AI inferencing. Does not require GPU.</p>

|

||||

</td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td width="50%" valign="top">

|

||||

<a href="https://github.com/mudler/LocalRecall">

|

||||

<img src="https://raw.githubusercontent.com/mudler/LocalRecall/refs/heads/main/static/localrecall_horizontal.png" width="300" alt="LocalRecall Logo">

|

||||

</a>

|

||||

</td>

|

||||

<td width="50%" valign="top">

|

||||

<h3><a href="https://github.com/mudler/LocalRecall">LocalRecall</a></h3>

|

||||

<p>A REST-ful API and knowledge base management system that provides persistent memory and storage capabilities for AI agents.</p>

|

||||

</td>

|

||||

</tr>

|

||||

</table>

|

||||

|

||||

## 🖥️ Hardware Configurations

|

||||

|

||||

LocalAGI supports multiple hardware configurations through Docker Compose profiles:

|

||||

|

||||

### CPU (Default)

|

||||

- No special configuration needed

|

||||

- Runs on any system with Docker

|

||||

- Best for testing and development

|

||||

- Supports text models only

|

||||

|

||||

### NVIDIA GPU

|

||||

- Requires NVIDIA GPU and drivers
- Uses CUDA for acceleration
- Best for high-performance inference
- Supports text, multimodal, and image generation models
- Run with: `docker compose -f docker-compose.nvidia.yaml up`
- Default models:
  - Text: `gemma-3-12b-it-qat`
  - Multimodal: `minicpm-v-2_6`
  - Image: `sd-1.5-ggml`
- Environment variables:
  - `MODEL_NAME`: Text model to use
  - `MULTIMODAL_MODEL`: Multimodal model to use
  - `IMAGE_MODEL`: Image generation model to use
  - `LOCALAI_SINGLE_ACTIVE_BACKEND`: Set to `true` to enable single active backend mode

|

||||

|

||||

### Intel GPU

|

||||

- Supports Intel Arc and integrated GPUs
- Uses SYCL for acceleration
- Best for Intel-based systems
- Supports text, multimodal, and image generation models
- Run with: `docker compose -f docker-compose.intel.yaml up`
- Default models:
  - Text: `gemma-3-12b-it-qat`
  - Multimodal: `minicpm-v-2_6`
  - Image: `sd-1.5-ggml`
- Environment variables:
  - `MODEL_NAME`: Text model to use
  - `MULTIMODAL_MODEL`: Multimodal model to use
  - `IMAGE_MODEL`: Image generation model to use
  - `LOCALAI_SINGLE_ACTIVE_BACKEND`: Set to `true` to enable single active backend mode
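The hardware targets described above differ mainly in which Compose file is passed with `-f`. A minimal shell sketch of that mapping follows. `compose_command` is a hypothetical helper, not something LocalAGI ships, and it only prints the command instead of running it.

```bash
#!/usr/bin/env bash
# Hypothetical helper (not part of LocalAGI): print the docker compose
# command for a hardware target. The file names come from the README.
compose_command() {
  local target="${1:-cpu}"   # default to the CPU setup
  case "$target" in
    cpu)    echo "docker compose up" ;;
    nvidia) echo "docker compose -f docker-compose.nvidia.yaml up" ;;
    intel)  echo "docker compose -f docker-compose.intel.yaml up" ;;
    *)      echo "unknown target: $target" >&2; return 1 ;;
  esac
}

# Show the command for an NVIDIA host.
compose_command nvidia
```

Printing the command keeps the sketch safe to try on a machine without Docker; piping the output to `sh` would actually start the stack.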

|

||||

|

||||

## Customize models

|

||||

|

||||

You can customize the models used by LocalAGI by setting environment variables when running docker-compose. For example:

|

||||

|

||||

```bash

|

||||

docker-compose run -i --rm localagi \

|

||||

--skip-avatar \

|

||||

--subtask-context \

|

||||

--postprocess \

|

||||

--search-results 3 \

|

||||

--prompt "prepare a plan for my roadtrip to san francisco"

|

||||

# CPU with custom model

|

||||

MODEL_NAME=gemma-3-12b-it docker compose up

|

||||

|

||||

# NVIDIA GPU with custom models

|

||||

MODEL_NAME=gemma-3-12b-it \

|

||||

MULTIMODAL_MODEL=minicpm-v-2_6 \

|

||||

IMAGE_MODEL=flux.1-dev-ggml \

|

||||

docker compose -f docker-compose.nvidia.yaml up

|

||||

|

||||

# Intel GPU with custom models

|

||||

MODEL_NAME=gemma-3-12b-it \

|

||||

MULTIMODAL_MODEL=minicpm-v-2_6 \

|

||||

IMAGE_MODEL=sd-1.5-ggml \

|

||||

docker compose -f docker-compose.intel.yaml up

|

||||

```

|

||||

|

||||

Limit results of planning to 3 steps:

|

||||

If no models are specified, it will use the defaults:

|

||||

- Text model: `gemma-3-12b-it-qat`

|

||||

- Multimodal model: `minicpm-v-2_6`

|

||||

- Image model: `sd-1.5-ggml`
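One way to picture this fallback is the shell's `${VAR:-default}` expansion, which Docker Compose also supports for variable interpolation. The sketch below assumes the Compose files resolve unset variables to the defaults listed above; `resolve_models` is a hypothetical helper used only for illustration.

```bash
#!/usr/bin/env bash
# Hypothetical helper (not part of LocalAGI): show which model names
# would be used once the documented defaults are applied.
resolve_models() {
  # ${VAR:-default} yields the default when VAR is unset or empty.
  echo "text=${MODEL_NAME:-gemma-3-12b-it-qat}"
  echo "multimodal=${MULTIMODAL_MODEL:-minicpm-v-2_6}"
  echo "image=${IMAGE_MODEL:-sd-1.5-ggml}"
}

# Override only the text model; the other two fall back to defaults.
MODEL_NAME=gemma-3-27b-it resolve_models
```

Note that `:-` treats an empty value the same as an unset one, so `MODEL_NAME=""` would also select the default in this sketch.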

|

||||

|

||||

Good (relatively small) models that have been tested are:

|

||||

|

||||

- `qwen_qwq-32b` (best in co-ordinating agents)

|

||||

- `gemma-3-12b-it`

|

||||

- `gemma-3-27b-it`

|

||||

|

||||

## 🏆 Why Choose LocalAGI?

|

||||

|

||||

- **✓ Ultimate Privacy**: No data ever leaves your hardware.

|

||||

- **✓ Flexible Model Integration**: Supports GGUF, GGML, and more thanks to [LocalAI](https://github.com/mudler/LocalAI).

|

||||

- **✓ Developer-Friendly**: Rich APIs and intuitive interfaces.

|

||||

- **✓ Effortless Setup**: Simple Docker compose setups and pre-built binaries.

|

||||

- **✓ Feature-Rich**: From planning to multimodal capabilities, connectors for Slack, MCP support, LocalAGI has it all.

|

||||

|

||||

## 🌐 The Local Ecosystem

|

||||

|

||||

LocalAGI is part of the powerful Local family of privacy-focused AI tools:

|

||||

|

||||

- [**LocalAI**](https://github.com/mudler/LocalAI): Run Large Language Models locally.

|

||||

- [**LocalRecall**](https://github.com/mudler/LocalRecall): Retrieval-Augmented Generation with local storage.

|

||||

- [**LocalAGI**](https://github.com/mudler/LocalAGI): Deploy intelligent AI agents securely and privately.

|

||||

|

||||

## 🌟 Screenshots

|

||||

|

||||

### Powerful Web UI

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

### Connectors Ready-to-Go

|

||||

|

||||

<p align="center">

|

||||

<img src="https://github.com/user-attachments/assets/4171072f-e4bf-4485-982b-55d55086f8fc" alt="Telegram" width="60"/>

|

||||

<img src="https://github.com/user-attachments/assets/9235da84-0187-4f26-8482-32dcc55702ef" alt="Discord" width="220"/>

|

||||

<img src="https://github.com/user-attachments/assets/a88c3d88-a387-4fb5-b513-22bdd5da7413" alt="Slack" width="220"/>

|

||||

<img src="https://github.com/user-attachments/assets/d249cdf5-ab34-4ab1-afdf-b99e2db182d2" alt="IRC" width="220"/>

|

||||

<img src="https://github.com/user-attachments/assets/52c852b0-4b50-4926-9fa0-aa50613ac622" alt="GitHub" width="220"/>

|

||||

</p>

|

||||

|

||||

## 📖 Full Documentation

|

||||

|

||||

Explore detailed documentation including:

|

||||

- [Installation Options](#installation-options)

|

||||

- [REST API Documentation](#rest-api)

|

||||

- [Connector Configuration](#connectors)

|

||||

- [Agent Configuration](#agent-configuration-reference)

|

||||

|

||||

### Environment Configuration

|

||||

|

||||

LocalAGI supports environment configurations. Note that these environment variables need to be specified in the localagi container in the docker-compose file to take effect.

|

||||

|

||||

| Variable | What It Does |
|----------|--------------|
| `LOCALAGI_MODEL` | Your go-to model |
| `LOCALAGI_MULTIMODAL_MODEL` | Optional model for multimodal capabilities |
| `LOCALAGI_LLM_API_URL` | OpenAI-compatible API server URL |
| `LOCALAGI_LLM_API_KEY` | API authentication |
| `LOCALAGI_TIMEOUT` | Request timeout settings |
| `LOCALAGI_STATE_DIR` | Where state gets stored |
| `LOCALAGI_LOCALRAG_URL` | LocalRecall connection |
| `LOCALAGI_ENABLE_CONVERSATIONS_LOGGING` | Toggle conversation logs |
| `LOCALAGI_API_KEYS` | A comma separated list of api keys used for authentication |
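`LOCALAGI_API_KEYS` is described as a comma separated list. As a rough illustration of that format, the sketch below splits such a value into one key per line. `split_api_keys` is a hypothetical helper; how LocalAGI itself parses the value (for example, whether it trims whitespace) is not specified here.

```bash
#!/usr/bin/env bash
# Hypothetical helper (not part of LocalAGI): split a comma separated
# list of API keys and print one key per line, skipping empty entries.
split_api_keys() {
  # Use the first argument, or fall back to the environment variable.
  local raw="${1:-${LOCALAGI_API_KEYS:-}}"
  local -a keys=()
  local key
  # IFS is set only for this read, so the split does not leak elsewhere.
  IFS=',' read -r -a keys <<< "$raw"
  for key in "${keys[@]}"; do
    if [ -n "$key" ]; then
      printf '%s\n' "$key"
    fi
  done
  return 0
}

# Example with two placeholder keys.
split_api_keys "key-one,key-two"
```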

|

||||

|

||||

## Installation Options

|

||||

|

||||

### Pre-Built Binaries

|

||||

|

||||

Download ready-to-run binaries from the [Releases](https://github.com/mudler/LocalAGI/releases) page.

|

||||

|

||||

### Source Build

|

||||

|

||||

Requirements:

|

||||

- Go 1.20+

|

||||

- Git

|

||||

- Bun 1.2+

|

||||

|

||||

```bash

|

||||

docker-compose run -i --rm localagi \

|

||||

--skip-avatar \

|

||||

--subtask-context \

|

||||

--postprocess \

|

||||

--search-results 1 \

|

||||

--prompt "do a plan for my roadtrip to san francisco" \

|

||||

--plan-message "The assistant replies with a plan of 3 steps to answer the request with a list of subtasks with logical steps. The reasoning includes a self-contained, detailed and descriptive instruction to fullfill the task."

|

||||

# Clone repo

|

||||

git clone https://github.com/mudler/LocalAGI.git

|

||||

cd LocalAGI

|

||||

|

||||

# Build it

|

||||

cd webui/react-ui && bun i && bun run build

|

||||

cd ../..

|

||||

go build -o localagi

|

||||

|

||||

# Run it

|

||||

./localagi

|

||||

```

|

||||

|

||||

### Advanced

|

||||

### Development

|

||||

|

||||

localagi has several options in the CLI to tweak the experience:

|

||||

|

||||

- `--system-prompt` is the system prompt to use. If not specified, it will use none.

|

||||

- `--prompt` is the prompt to use for batch mode. If not specified, it will default to interactive mode.

|

||||

- `--interactive` is the interactive mode. When used with `--prompt` will drop you in an interactive session after the first prompt is evaluated.

|

||||

- `--skip-avatar` will skip avatar creation. Useful if you want to run it in a headless environment.

|

||||

- `--re-evaluate` will re-evaluate if another action is needed or we have completed the user request.

|

||||

- `--postprocess` will postprocess the reasoning for analysis.

|

||||

- `--subtask-context` will include context in subtasks.

|

||||

- `--search-results` is the number of search results to use.

|

||||

- `--plan-message` is the message to use during planning. You can override the message for example to force a plan to have a different message.

|

||||

- `--tts-api-base` is the TTS API base. Defaults to `http://api:8080`.

|

||||

- `--localai-api-base` is the LocalAI API base. Defaults to `http://api:8080`.

|

||||

- `--images-api-base` is the Images API base. Defaults to `http://api:8080`.

|

||||

- `--embeddings-api-base` is the Embeddings API base. Defaults to `http://api:8080`.

|

||||

- `--functions-model` is the functions model to use. Defaults to `functions`.

|

||||

- `--embeddings-model` is the embeddings model to use. Defaults to `all-MiniLM-L6-v2`.

|

||||

- `--llm-model` is the LLM model to use. Defaults to `gpt-4`.

|

||||

- `--tts-model` is the TTS model to use. Defaults to `en-us-kathleen-low.onnx`.

|

||||

- `--stablediffusion-model` is the Stable Diffusion model to use. Defaults to `stablediffusion`.

|

||||

- `--stablediffusion-prompt` is the Stable Diffusion prompt to use. Defaults to `DEFAULT_PROMPT`.

|

||||

- `--force-action` will force a specific action.

|

||||

- `--debug` will enable debug mode.

|

||||

|

||||

### Customize

|

||||

|

||||

To use a different model, you can see the examples in the `config` folder.

|

||||

To select a model, modify the `.env` file and change the `PRELOAD_MODELS_CONFIG` variable to use a different configuration file.

|

||||

|

||||

### Caveats

|

||||

|

||||

The "goodness" of a model has a big impact on how LocalAGI works. Currently `13b` models are powerful enough to perform multi-step tasks or do more actions. However, they are quite slow when running on CPU (no big surprise here).

|

||||

|

||||

The context size is a limitation - you can find in the `config` examples to run with superhot 8k context size, but the quality is not good enough to perform complex tasks.

|

||||

|

||||

## What is LocalAGI?

|

||||

|

||||

It is a dead simple experiment to show how to tie the various LocalAI functionalities to create a virtual assistant that can do tasks. It is simple on purpose, trying to be minimalistic and easy to understand and customize for everyone.

|

||||

|

||||

It is different from babyAGI or AutoGPT as it uses [LocalAI functions](https://localai.io/features/openai-functions/) - it is a from scratch attempt built on purpose to run locally with [LocalAI](https://localai.io) (no API keys needed!) instead of expensive, cloud services. It sets apart from other projects as it strives to be small, and easy to fork on.

|

||||

|

||||

### How it works?

|

||||

|

||||

`LocalAGI` just does the minimal around LocalAI functions to create a virtual assistant that can do generic tasks. It works by an endless loop of `intent detection`, `function invocation`, `self-evaluation` and `reply generation` (if it decides to reply! :)). The agent is capable of planning complex tasks by invoking multiple functions, and of remembering things from the conversation.

|

||||

|

||||

In a nutshell, it goes like this:

|

||||

|

||||

- Decide based on the conversation history if it needs to take an action by using functions. It uses the LLM to detect the intent from the conversation.

|

||||

- If it needs to take an action (e.g. "remember something from the conversation") or generate complex tasks (executing a chain of functions to achieve a goal), it invokes the functions

|

||||

- it re-evaluates if it needs to do any other action

|

||||

- return the result back to the LLM to generate a reply for the user

|

||||

|

||||

Under the hood LocalAI converts functions to llama.cpp BNF grammars. While OpenAI fine-tuned a model to reply to functions, LocalAI constrains the LLM to follow grammars. This is a much more efficient way to do it, and it is also more flexible as you can define your own functions and grammars. To learn more about this, check out the [LocalAI documentation](https://localai.io/docs/llm) and my tweet that explains how it works under the hood: https://twitter.com/mudler_it/status/1675524071457533953.

|

||||

|

||||

### Agent functions

|

||||

|

||||

The intention of this project is to keep the agent minimal, so that it can be built upon or forked. The agent is capable of doing the following functions:

|

||||

- remember something from the conversation

|

||||

- recall something from the conversation

|

||||

- search something from the internet

|

||||

- plan a complex task by invoking multiple functions

|

||||

- write files to disk

|

||||

|

||||

## Roadmap

|

||||

|

||||

- [x] 100% Local, with Local AI. NO API KEYS NEEDED!

|

||||

- [x] Create a simple virtual assistant

|

||||

- [x] Make the virtual assistant do functions like store long-term memory and autonomously search between them when needed

|

||||

- [x] Create the assistant avatar with Stable Diffusion

|

||||

- [x] Give it a voice

|

||||

- [ ] Use weaviate instead of Chroma

|

||||

- [ ] Get voice input (push to talk or wakeword)

|

||||

- [ ] Make a REST API (OpenAI compliant?) so can be plugged by e.g. a third party service

|

||||

- [x] Take a system prompt so can act with a "character" (e.g. "answer in rick and morty style")

|

||||

|

||||

## Development

|

||||

|

||||

Run docker-compose with main.py checked-out:

|

||||

The development workflow is similar to the source build, but with additional steps for hot reloading of the frontend:

|

||||

|

||||

```bash

|

||||

docker-compose run -v main.py:/app/main.py -i --rm localagi

|

||||

# Clone repo

|

||||

git clone https://github.com/mudler/LocalAGI.git

|

||||

cd LocalAGI

|

||||

|

||||

# Install dependencies and start frontend development server

|

||||

cd webui/react-ui && bun i && bun run dev

|

||||

```

|

||||

|

||||

## Notes

|

||||

Then in separate terminal:

|

||||

|

||||

- a 13b model is enough for doing contextualized research and search/retrieve memory

|

||||

- a 30b model is enough to generate a roadmap trip plan ( so cool! )

|

||||

- With superhot models it loses its magic, but it may be suitable for search

|

||||

- Context size is your enemy. `--postprocess` some times helps, but not always

|

||||

- It can be silly!

|

||||

- It is slow on CPU: don't expect `7b` models to perform well; `13b` models perform better but are quite slow on CPU.

|

||||

```bash

|

||||

# Start development server

|

||||

cd ../.. && go run main.go

|

||||

```

|

||||

|

||||

> Note: see webui/react-ui/.vite.config.js for env vars that can be used to configure the backend URL

|

||||

|

||||

## CONNECTORS

|

||||

|

||||

Link your agents to the services you already use. Configuration examples below.

|

||||

|

||||

### GitHub Issues

|

||||

|

||||

```json
{
  "token": "YOUR_PAT_TOKEN",
  "repository": "repo-to-monitor",
  "owner": "repo-owner",
  "botUserName": "bot-username"
}
```

|

||||

|

||||

### Discord

|

||||

|

||||

After [creating your Discord bot](https://discordpy.readthedocs.io/en/stable/discord.html):

|

||||

|

||||

```json
{
  "token": "Bot YOUR_DISCORD_TOKEN",
  "defaultChannel": "OPTIONAL_CHANNEL_ID"
}
```

|

||||

> Don't forget to enable "Message Content Intent" in Bot(tab) settings!

|

||||

> Enable "Message Content Intent" in the Bot tab!

|

||||

|

||||

### Slack

|

||||

|

||||

Use the included `slack.yaml` manifest to create your app, then configure:

|

||||

|

||||

```json
{
  "botToken": "xoxb-your-bot-token",
  "appToken": "xapp-your-app-token"
}
```

|

||||

|

||||

- Create the OAuth bot token from "OAuth & Permissions" -> "OAuth Tokens for Your Workspace"

|

||||